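ELK is short for Elasticsearch, Logstash and Kibana. The stack is typically used for logging systems, covering log collection, log forwarding and storage, and log visualization, and it provides a simple, efficient way to process logs.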

Since I have no spare machines, I use Docker here to simulate the deployment and use of a simple ELK system.

Setting up Logstash

Prepare the image

docker search logstash # output omitted

docker pull logstash:5.5.2 # output omitted

➜ logstash docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

es_test latest 43cf30ef8591 2 days ago 349MB

redis latest 0f55cf3661e9 9 days ago 95MB

logstash 5.5.2 98f8400d2944 17 months ago 724MB

elasticsearch 2.3.5 1c3e7681c53c 2 years ago 346MB

Run a container

➜ logstash docker run -i -t 98f8400d2944 /bin/bash

root@36134d90b5cd:/# ls

bin boot dev docker-entrypoint.sh docker-java-home etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

root@36134d90b5cd:/# cd /home

root@36134d90b5cd:/home# ls

root@36134d90b5cd:/home# cd ../

root@36134d90b5cd:/# ls

bin boot dev docker-entrypoint.sh docker-java-home etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

root@36134d90b5cd:/# cd /etc/logstash/

root@36134d90b5cd:/etc/logstash# ls

conf.d jvm.options log4j2.properties log4j2.properties.dist logstash.yml startup.options

root@36134d90b5cd:/etc/logstash# cat logstash.yml

There is no logstash.conf yet. (The file name is arbitrary: logstash's -f option points it at the config file that defines the input, filter and output stages.)

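The pulled image ships without vim or any other editor, so the file cannot be edited inside the container. To use a custom configuration, write it on the host and copy it into the container with docker cp.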

➜ logstash docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

36134d90b5cd 98f8400d2944 "/docker-entrypoint.…" About a minute ago Up About a minute priceless_feistel

687bf289bb43 43cf30ef8591 "/docker-entrypoint.…" 5 minutes ago Up 5 minutes 0.0.0.0:9200->9200/tcp, 9300/tcp my_elasticsearch

➜ logstash docker cp logstash.conf 36134d90b5cd:/etc/logstash

➜ logstash cat logstash.conf

input { stdin {} }

output {

elasticsearch {

action => "index"

hosts => "http://172.17.0.2:9200"

index => "my_log" # index name in Elasticsearch

}

# Debug output; comment this out for real runs

stdout {

codec => json_lines

}

#redis {

# codec => plain

# host => ["127.0.0.1:6379"]

# data_type => list

# key => logstash

#}

}

➜ logstash
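
To keep the Elasticsearch address and the index name in one place, the pipeline file could also be generated on the host before the docker cp step. The sketch below is a hypothetical helper (render_logstash_conf is not part of Logstash); it only reproduces the stdin input, the elasticsearch output and the optional json_lines stdout shown above, and leaves out the commented-out redis block.

```python
def render_logstash_conf(es_host: str, index: str, debug: bool = True) -> str:
    """Build a Logstash pipeline config: stdin -> Elasticsearch (+ optional stdout).

    Hypothetical helper; it mirrors the hand-written logstash.conf above.
    """
    outputs = [
        "  elasticsearch {",
        '    action => "index"',
        f'    hosts => "{es_host}"',
        f'    index => "{index}"  # index name in Elasticsearch',
        "  }",
    ]
    if debug:
        # Echo every event as one JSON object per line, like the debug stdout block.
        outputs += ["  stdout {", "    codec => json_lines", "  }"]
    lines = ["input { stdin {} }", "output {", *outputs, "}"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Print the config; redirect it to logstash.conf before running docker cp.
    print(render_logstash_conf("http://172.17.0.2:9200", "my_log"), end="")
```

Redirecting the printed text into logstash.conf and copying it in with docker cp should give the same pipeline as the hand-written file; passing debug=False drops the stdout block, matching the "comment this out for real runs" advice.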

The 172.17.0.2:9200 in the config file is the IP address of the Elasticsearch container on Docker's internal network. It can be looked up like this:

➜ logstash docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

36134d90b5cd 98f8400d2944 "/docker-entrypoint.…" 9 minutes ago Up 9 minutes priceless_feistel

687bf289bb43 43cf30ef8591 "/docker-entrypoint.…" 13 minutes ago Up 13 minutes 0.0.0.0:9200->9200/tcp, 9300/tcp my_elasticsearch

➜ logstash docker inspect 687bf289bb43 | grep IP

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"IPAMConfig": null,

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

➜ logstash
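
grep works, but the same information can be read from the JSON that docker inspect prints. A minimal sketch, assuming the container sits on Docker's default bridge network (the two "IPAddress" lines in the grep output above most likely come from the top-level NetworkSettings.IPAddress and from NetworkSettings.Networks.bridge.IPAddress). container_ips and inspect_container are hypothetical helper names.

```python
import json
import subprocess


def container_ips(inspect_json: str) -> dict:
    """Map network name -> IP address from `docker inspect <id>` output."""
    info = json.loads(inspect_json)[0]  # docker inspect prints a JSON array
    networks = info["NetworkSettings"]["Networks"]
    return {name: net["IPAddress"] for name, net in networks.items()}


def inspect_container(container_id: str) -> str:
    """Run `docker inspect` and return its raw JSON output (needs Docker)."""
    result = subprocess.run(
        ["docker", "inspect", container_id],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

With Docker available, container_ips(inspect_container("687bf289bb43")) would be expected to yield {"bridge": "172.17.0.2"} for the Elasticsearch container above.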

Testing Logstash

That completes the Logstash preparation; next, start the Logstash service.

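Back in the shell of the container started earlier (36134d90b5cd). A new docker run would create a fresh container that does not contain the copied logstash.conf; to open a second shell in the running container, use docker exec -it 36134d90b5cd /bin/bash.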

root@36134d90b5cd:/etc/logstash# ls

conf.d jvm.options log4j2.properties log4j2.properties.dist logstash.yml startup.options

root@36134d90b5cd:/etc/logstash# cd conf.d/

root@36134d90b5cd:/etc/logstash/conf.d# ls

root@36134d90b5cd:/etc/logstash/conf.d# cd ..

root@36134d90b5cd:/etc/logstash# ls

conf.d jvm.options log4j2.properties log4j2.properties.dist logstash.yml startup.options

root@36134d90b5cd:/etc/logstash# ls

conf.d jvm.options log4j2.properties log4j2.properties.dist logstash.conf logstash.yml startup.options

root@36134d90b5cd:/etc/logstash# logstash -f logstash.conf

Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties

03:00:36.516 [main] INFO logstash.setting.writabledirectory - Creating directory {:setting=>"path.queue", :path=>"/var/lib/logstash/queue"}

03:00:36.520 [main] INFO logstash.setting.writabledirectory - Creating directory {:setting=>"path.dead_letter_queue", :path=>"/var/lib/logstash/dead_letter_queue"}

03:00:36.552 [LogStash::Runner] INFO logstash.agent - No persistent UUID file found. Generating new UUID {:uuid=>"fc54205f-077a-4c07-9ae4-85f689c970b1", :path=>"/var/lib/logstash/uuid"}

03:00:37.093 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Elasticsearch pool URLs updated {:changes=>{:removed=>[], :added=>[http://172.17.0.2:9200/]}}

03:00:37.094 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://172.17.0.2:9200/, :path=>"/"}

03:00:37.188 [[main]-pipeline-manager] WARN logstash.outputs.elasticsearch - Restored connection to ES instance {:url=>"http://172.17.0.2:9200/"}

03:00:37.200 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Using mapping template from {:path=>nil}

03:00:37.460 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Attempting to install template {:manage_template=>{"template"=>"logstash-*", "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "omit_norms"=>true}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"string", "index"=>"analyzed", "omit_norms"=>true, "fielddata"=>{"format"=>"disabled"}}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"string", "index"=>"analyzed", "omit_norms"=>true, "fielddata"=>{"format"=>"disabled"}, "fields"=>{"raw"=>{"type"=>"string", "index"=>"not_analyzed", "doc_values"=>true, "ignore_above"=>256}}}}}, {"float_fields"=>{"match"=>"*", "match_mapping_type"=>"float", "mapping"=>{"type"=>"float", "doc_values"=>true}}}, {"double_fields"=>{"match"=>"*", "match_mapping_type"=>"double", "mapping"=>{"type"=>"double", "doc_values"=>true}}}, {"byte_fields"=>{"match"=>"*", "match_mapping_type"=>"byte", "mapping"=>{"type"=>"byte", "doc_values"=>true}}}, {"short_fields"=>{"match"=>"*", "match_mapping_type"=>"short", "mapping"=>{"type"=>"short", "doc_values"=>true}}}, {"integer_fields"=>{"match"=>"*", "match_mapping_type"=>"integer", "mapping"=>{"type"=>"integer", "doc_values"=>true}}}, {"long_fields"=>{"match"=>"*", "match_mapping_type"=>"long", "mapping"=>{"type"=>"long", "doc_values"=>true}}}, {"date_fields"=>{"match"=>"*", "match_mapping_type"=>"date", "mapping"=>{"type"=>"date", "doc_values"=>true}}}, {"geo_point_fields"=>{"match"=>"*", "match_mapping_type"=>"geo_point", "mapping"=>{"type"=>"geo_point", "doc_values"=>true}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "doc_values"=>true}, "@version"=>{"type"=>"string", "index"=>"not_analyzed", "doc_values"=>true}, "geoip"=>{"type"=>"object", "dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip", "doc_values"=>true}, "location"=>{"type"=>"geo_point", "doc_values"=>true}, "latitude"=>{"type"=>"float", "doc_values"=>true}, "longitude"=>{"type"=>"float", "doc_values"=>true}}}}}}}}

03:00:37.477 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - Installing elasticsearch template to _template/logstash

03:00:37.626 [[main]-pipeline-manager] INFO logstash.outputs.elasticsearch - New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["http://172.17.0.2:9200"]}

03:00:37.629 [[main]-pipeline-manager] INFO logstash.pipeline - Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}

03:00:37.648 [[main]-pipeline-manager] INFO logstash.pipeline - Pipeline main started

The stdin plugin is now waiting for input:

03:00:37.814 [Api Webserver] INFO logstash.agent - Successfully started Logstash API endpoint {:port=>9600}

{"@timestamp":"2019-02-16T03:00:37.715Z","@version":"1","host":"36134d90b5cd","message":""}

{"@timestamp":"2019-02-16T03:00:37.681Z","@version":"1","host":"36134d90b5cd","message":""}

helloworld

{"@timestamp":"2019-02-16T03:00:53.619Z","@version":"1","host":"36134d90b5cd","message":"helloworld"}

what's up?

{"@timestamp":"2019-02-16T03:01:41.383Z","@version":"1","host":"36134d90b5cd","message":"what's up?"}

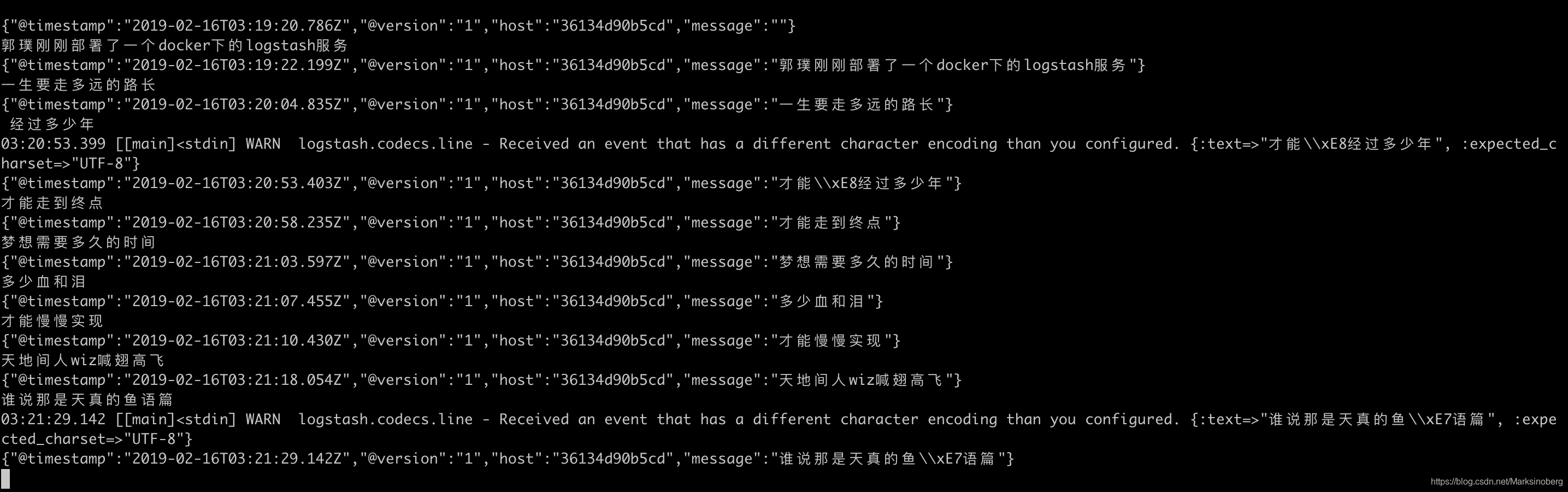

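The helloworld and what's up? lines above were typed through the stdin input. Each was echoed back as a JSON event without errors, which shows that Logstash is running correctly.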
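
Because the stdout output uses the json_lines codec, each event is printed as one JSON object per line, which makes the echo easy to check programmatically. A small sketch, assuming the terminal output has been captured as text; parse_json_lines is a hypothetical helper, and the sample reuses the two events from the transcript above.

```python
import json


def parse_json_lines(output: str) -> list:
    """Parse stdout produced by the json_lines codec: one JSON event per line.

    Lines that are not JSON objects (e.g. the text typed at the stdin prompt)
    are skipped.
    """
    events = []
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("{"):
            continue
        events.append(json.loads(line))
    return events


sample = '''helloworld
{"@timestamp":"2019-02-16T03:00:53.619Z","@version":"1","host":"36134d90b5cd","message":"helloworld"}
what's up?
{"@timestamp":"2019-02-16T03:01:41.383Z","@version":"1","host":"36134d90b5cd","message":"what's up?"}
'''
messages = [event["message"] for event in parse_json_lines(sample)]
print(messages)  # ['helloworld', "what's up?"]
```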

Setting up Elasticsearch

Prepare the image

docker search elasticsearch # output omitted

docker pull elasticsearch:2.3.5 # output omitted

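Checking Elasticsearch's runtime information through raw HTTP requests alone is somewhat tedious, so, following tutorials online, I wrote my own Dockerfile that installs the head plugin. This makes it easy to operate Elasticsearch from a web UI.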

Dockerfile

FROM elasticsearch:2.3.5

RUN /usr/share/elasticsearch/bin/plugin install mobz/elasticsearch-head

EXPOSE 9200

Build a custom image

# From the directory containing this Dockerfile, build the image

docker build -t es_test .

Check the new image

➜ elasticsearch ls

Dockerfile elasticsearch.yml operate.py

➜ elasticsearch docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

es_test latest 43cf30ef8591 2 days ago 349MB

redis latest 0f55cf3661e9 9 days ago 95MB

logstash 5.5.2 98f8400d2944 17 months ago 724MB

elasticsearch 2.3.5 1c3e7681c53c 2 years ago 346MB

➜ elasticsearch

Run Elasticsearch

➜ elasticsearch docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

➜ elasticsearch docker run -d -p 9200:9200 --name="my_elasticsearch" 43cf30ef8591

687bf289bb43d0a037293a2f308b59ce50f5fb475f0b50dd075a0f304be789a3

➜ elasticsearch docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

687bf289bb43 43cf30ef8591 "/docker-entrypoint.…" 18 seconds ago Up 17 seconds 0.0.0.0:9200->9200/tcp, 9300/tcp my_elasticsearch

➜ elasticsearch docker inspect 687bf289bb43 | grep IP

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"IPAMConfig": null,

"IPAddress": "172.17.0.2",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

➜ elasticsearch

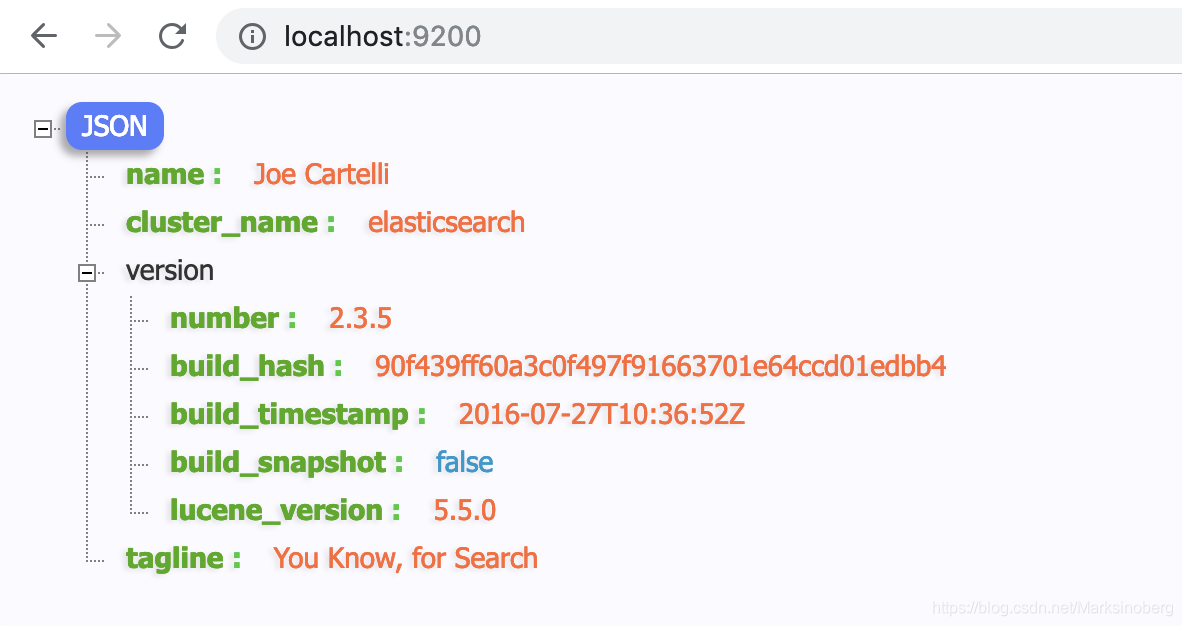

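The -p 9200:9200 option of docker run maps port 9200 on the host to port 9200 in the container, so Elasticsearch's runtime information can be viewed at port 9200 on the host.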
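
The same check can be scripted. A minimal sketch using only the Python standard library, assuming the -p 9200:9200 mapping above so that Elasticsearch answers on localhost; get_json is a hypothetical helper, and its opener parameter exists only so the function can be exercised without a running server.

```python
import json
import urllib.request


def get_json(url: str, opener=urllib.request.urlopen, timeout: float = 5.0) -> dict:
    """GET `url` and decode the JSON body.

    `opener` defaults to urllib.request.urlopen; it is injectable for testing.
    """
    with opener(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Usage against the container above (assumes localhost:9200 is mapped):
#   info = get_json("http://localhost:9200/")
#   print(info["version"]["number"])
```

For the 2.3.5 node built above, the version.number field of the root document would be expected to read "2.3.5".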

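Since the head plugin is already installed in the image, the cluster status can also be browsed through head, which Elasticsearch 2.x serves as a site plugin at http://localhost:9200/_plugin/head/.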

Viewing Logstash data in Elasticsearch

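Before checking, first type some more data into the terminal where Logstash is running, so there is some source data to look at.

Then check in Elasticsearch whether the corresponding documents have arrived.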
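
Besides head, the my_log index configured in logstash.conf can be queried over HTTP with Elasticsearch's URI search (GET /<index>/_search?q=...). A sketch under the same localhost:9200 assumption; search_url, hit_messages and search are hypothetical helpers, and the response layout assumed here is the usual hits.hits[]._source structure.

```python
import json
import urllib.parse
import urllib.request


def search_url(base: str, index: str, query: str, size: int = 10) -> str:
    """Build a URI-search URL such as /my_log/_search?q=message%3Ahelloworld."""
    params = urllib.parse.urlencode({"q": query, "size": size})
    return f"{base.rstrip('/')}/{index}/_search?{params}"


def hit_messages(response: dict) -> list:
    """Pull the `message` field out of each hit's _source."""
    return [hit["_source"].get("message") for hit in response["hits"]["hits"]]


def search(base: str, index: str, query: str) -> list:
    """Run the search against a live cluster and return the matched messages."""
    with urllib.request.urlopen(search_url(base, index, query), timeout=5) as resp:
        return hit_messages(json.loads(resp.read().decode("utf-8")))
```

With the cluster above running, search("http://localhost:9200", "my_log", "message:helloworld") should return the helloworld event typed earlier.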

With that, the Elasticsearch service is set up as well.

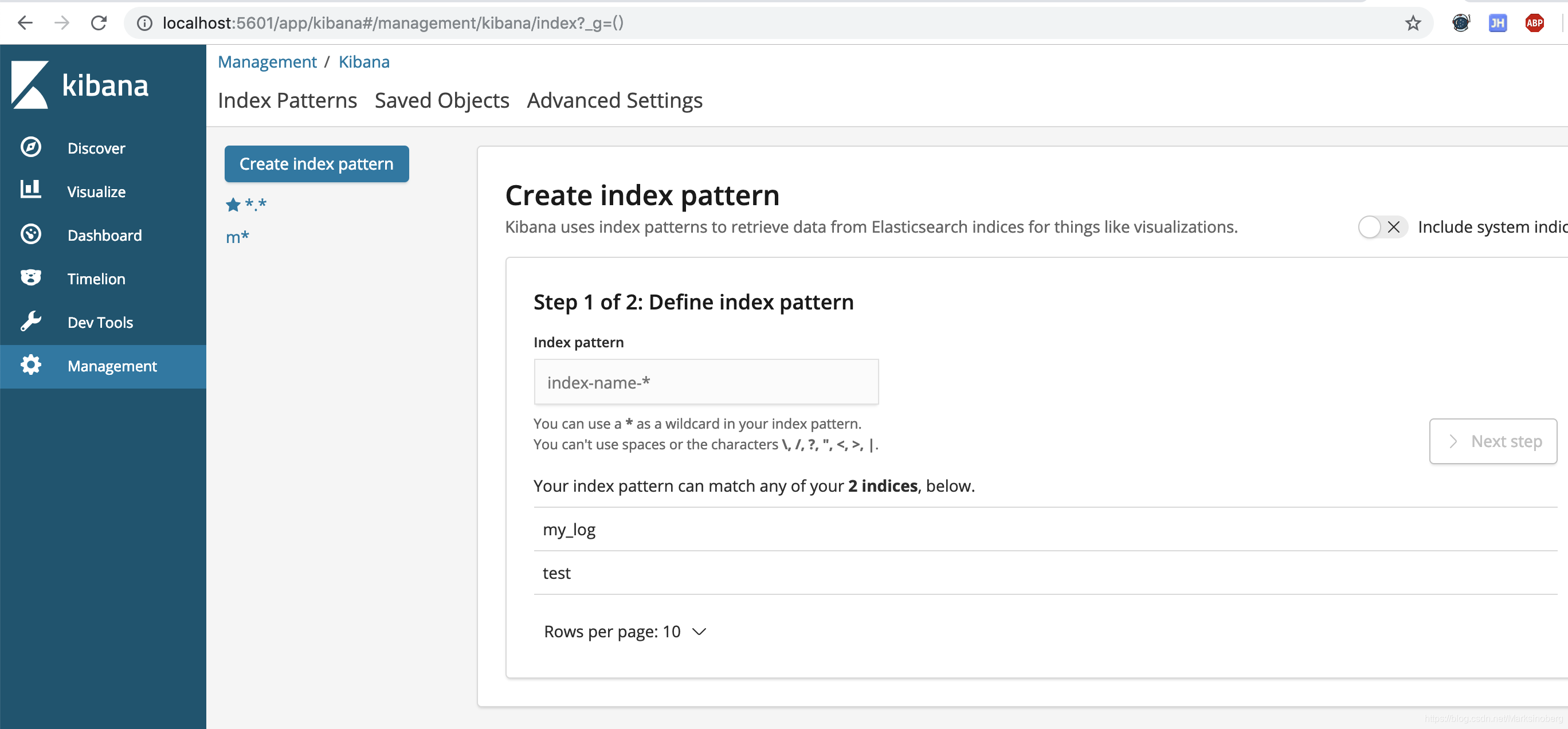

Setting up Kibana

docker search kibana

docker pull nshou/elasticsearch-kibana

Using default tag: latest

latest: Pulling from nshou/elasticsearch-kibana

8e3ba11ec2a2: Pull complete

311ad0da4533: Pull complete

391a6a6b3651: Pull complete

b80b8b42a95a: Pull complete

0ae9073eaa12: Pull complete

Digest: sha256:e504d283be8cd13f9e1d1ced9a67a140b40a10f5b39f4dde4010dbebcbdd6da0

Status: Downloaded newer image for nshou/elasticsearch-kibana:latest

Run it

➜ kibana docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

es_test latest 43cf30ef8591 2 days ago 349MB

redis latest 0f55cf3661e9 9 days ago 95MB

nshou/elasticsearch-kibana latest 1509f8ccdbf3 2 weeks ago 383MB

logstash 5.5.2 98f8400d2944 17 months ago 724MB

elasticsearch 2.3.5 1c3e7681c53c 2 years ago 346MB

➜ kibana docker run --name my_kibana -p 5601:5601 -d -e ELASTICSEARCH_URL=http://172.17.0.2:9200 1509f8ccdbf3

e0e7eab877ea3a1e603efbf041038f7e3c36e2b21e3e1934bf7e7d39606b8bac

➜ kibana docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e0e7eab877ea 1509f8ccdbf3 "/bin/sh -c 'sh elas…" 4 seconds ago Up 4 seconds 0.0.0.0:5601->5601/tcp, 9200/tcp my_kibana

36134d90b5cd 98f8400d2944 "/docker-entrypoint.…" 33 minutes ago Up 33 minutes priceless_feistel

687bf289bb43 43cf30ef8591 "/docker-entrypoint.…" 37 minutes ago Up 37 minutes 0.0.0.0:9200->9200/tcp, 9300/tcp my_elasticsearch

➜ kibana

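Note that ELASTICSEARCH_URL above points at the internal IP address of the Elasticsearch container deployed earlier; with any other address, Kibana may fail to connect.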
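
Kibana usually needs some time after docker run before it answers on port 5601, so a script that moves on to the next step may want to wait for it first. A minimal polling sketch, assuming the -p 5601:5601 mapping above; wait_for_http is a hypothetical helper, and treating any status below 500 as "up" is a design choice (a Kibana that is still starting may answer 503).

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout: float = 60.0, interval: float = 2.0,
                  opener=urllib.request.urlopen, sleep=time.sleep) -> bool:
    """Poll `url` until it answers with a non-5xx status or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with opener(url, timeout=interval) as resp:
                if resp.status < 500:
                    return True
        except urllib.error.HTTPError as err:
            if err.code < 500:
                return True  # e.g. a 404 still means the server is listening
        except OSError:
            pass  # URLError / connection refused: not listening yet
        if time.monotonic() >= deadline:
            return False
        sleep(interval)
```

wait_for_http("http://localhost:5601/") returns True once Kibana responds with a non-5xx status, or False after 60 seconds; opener and sleep are parameters only so the loop can be tested without a server.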

Summary

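Looking back, I'm really glad Docker exists. Everything could be installed directly on the host, but that would clutter the host and would not give proper isolation.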

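Whatever you want to try, sketch the service diagram, start the containers one by one, then configure and deploy each of them; a demo service stack comes together easily.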

Thanks to Docker, and to technology.