Previous: Chapter 1: Getting Started with Hive

1. Hive installation resources

1.1 Hive official website:

http://hive.apache.org/

1.2 Documentation: https://cwiki.apache.org/confluence/display/Hive/GettingStarted

1.3 Download:

http://archive.apache.org/dist/hive/

1.4 GitHub repository:

https://github.com/apache/hive

2. Installing and deploying Hive

2.1 Installing and configuring Hive

(1) Upload apache-hive-1.2.1-bin.tar.gz to the /opt/software directory on the Linux host.

(2) Extract apache-hive-1.2.1-bin.tar.gz into /opt/software/module/.

[root@hadoop100 software]# tar -zxvf apache-hive-1.2.1-bin.tar.gz -C /opt/software/module/

(3) Rename the extracted directory apache-hive-1.2.1-bin to hive-1.2.1.

(4) Configure /etc/profile.

[root@hadoop100 software]# vim /etc/profile

#HIVE_HOME

export HIVE_HOME=/opt/software/module/hive-1.2.1

export PATH=$JAVA_HOME/bin:$PATH:$HIVE_HOME/bin

Save and exit.

[root@hadoop100 software]# source /etc/profile
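Editing /etc/profile by hand works, but repeating the step can leave duplicate entries. The sketch below appends the same lines only when they are missing. The helper name add_hive_env and the scratch file are assumptions made for illustration; on a real machine the first argument would be /etc/profile.

```shell
#!/usr/bin/env bash
# add_hive_env (hypothetical helper): append the Hive environment settings to
# a profile file unless an "export HIVE_HOME=" line is already present.
add_hive_env() {
  local profile="$1" hive_home="$2"
  if ! grep -q '^export HIVE_HOME=' "$profile" 2>/dev/null; then
    {
      echo '#HIVE_HOME'
      echo "export HIVE_HOME=$hive_home"
      # Single quotes keep the variables unexpanded until the profile is sourced.
      echo 'export PATH=$JAVA_HOME/bin:$PATH:$HIVE_HOME/bin'
    } >> "$profile"
  fi
}

# Demonstration against a scratch file instead of /etc/profile.
demo_profile="$(mktemp)"
add_hive_env "$demo_profile" /opt/software/module/hive-1.2.1
add_hive_env "$demo_profile" /opt/software/module/hive-1.2.1   # second call changes nothing
cat "$demo_profile"
```

After a real run, source the profile again so the current shell picks up the new variables.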

2.2 Hadoop cluster configuration

(1) HDFS and YARN must be running:

[root@hadoop100 hadoop-2.7.2]# sbin/start-dfs.sh

[root@hadoop100 hadoop-2.7.2]# sbin/start-yarn.sh

(2) Copy hive-env.sh.template and name the copy hive-env.sh:

[root@hadoop100 conf]# cp hive-env.sh.template hive-env.sh

Edit hive-env.sh and set:

HADOOP_HOME=/opt/hadoop/module/hadoop-2.7.2

export HIVE_CONF_DIR=/opt/software/module/hive-1.2.1/conf

Next, start Hive:

[root@hadoop100 bin]# hive

Logging initialized using configuration in jar:file:/opt/software/module/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

Hive started successfully.

2.3 Basic Hive operations

(1) Start Hive

[root@hadoop100 bin]# hive

Logging initialized using configuration in jar:file:/opt/software/module/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

(2) List the databases

hive> show databases;

OK

default

Time taken: 1.053 seconds, Fetched: 1 row(s)

(3) Switch to the default database

hive> use default;

OK

Time taken: 0.04 seconds

(4) List the tables in the default database

hive> show tables;

OK

Time taken: 0.057 seconds

(5) Create a table

hive> create table student(id int, name string);

OK

Time taken: 0.735 seconds

(6) List the tables again

hive> show tables;

OK

student

Time taken: 0.025 seconds, Fetched: 1 row(s)

(7) View the table structure

hive> desc student;

OK

id int

name string

Time taken: 0.516 seconds, Fetched: 2 row(s)

(8) Insert data into the table

hive> insert into student (id,name) values (1,'tom');

The insert fails with:

FAILED: SemanticException [Error 10293]: Unable to create temp file for insert values File /tmp/hive/root/2f5b7b9e-08b4-405a-ad42-05bc4722c13c/_tmp_space.db/Values__Tmp__Table__4/data_file could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1547)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getNewBlockTargets(FSNamesystem.java:3107)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3031)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:724)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:492)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:969)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2049)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2045)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2043)

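The cause is in the message itself: "There are 0 datanode(s) running". The DataNode had failed to start because of a permissions problem.

Start the DataNode, then check the running processes: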

[root@hadoop101 hadoop-2.7.2]# jps

13445 SecondaryNameNode

13288 DataNode

13882 NodeManager

13596 ResourceManager

14508 Jps

13165 NameNode

14078 RunJar

[root@hadoop101 hadoop-2.7.2]#
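Reading the jps listing by eye is easy to get wrong, since SecondaryNameNode also contains the text NameNode. The sketch below checks a listing for required daemons with an exact name match. The helper name missing_daemons and the sample listing are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# missing_daemons (hypothetical helper): read jps-style output
# ("<pid> <name>") on stdin and print every daemon name given as an
# argument that does not appear in the listing.
missing_daemons() {
  local listing name
  listing="$(cat)"
  for name in "$@"; do
    # grep -x requires the whole line to match, so NameNode does not
    # accidentally match SecondaryNameNode.
    if ! printf '%s\n' "$listing" | awk '{print $2}' | grep -qx "$name"; then
      echo "$name"
    fi
  done
}

# Sample listing standing in for real jps output; DataNode is absent on purpose.
sample='13445 SecondaryNameNode
13165 NameNode
13596 ResourceManager
13882 NodeManager'

missing="$(printf '%s\n' "$sample" | missing_daemons NameNode DataNode NodeManager)"
echo "missing: $missing"
```

On a real node the listing would come from jps itself, for example `jps | missing_daemons NameNode DataNode ResourceManager NodeManager`.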

Insert the data again:

hive> insert into student (id,name) values (1,'tom');

Query ID = root_20191229171757_0f564e83-d1f2-45f2-8aa7-df6412462609

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1577638672448_0001, Tracking URL = http://hadoop101:8088/proxy/application_1577638672448_0001/

Kill Command = /usr/local/hadoop/module/hadoop-2.7.2/bin/hadoop job -kill job_1577638672448_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2019-12-29 17:18:19,060 Stage-1 map = 0%, reduce = 0%

2019-12-29 17:18:30,429 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.45 sec

MapReduce Total cumulative CPU time: 2 seconds 450 msec

Ended Job = job_1577638672448_0001

Stage-4 is selected by condition resolver.

Stage-3 is filtered out by condition resolver.

Stage-5 is filtered out by condition resolver.

Moving data to: hdfs://hadoop101:9000/user/hive/warehouse/student/.hive-staging_hive_2019-12-29_17-17-57_089_2845663360627823422-1/-ext-10000

Loading data to table default.student

Table default.student stats: [numFiles=1, numRows=1, totalSize=6, rawDataSize=5]

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Cumulative CPU: 2.45 sec HDFS Read: 3555 HDFS Write: 77 SUCCESS

Total MapReduce CPU Time Spent: 2 seconds 450 msec

OK

Time taken: 36.286 seconds

(9) Query the table

hive> select * from student;

OK

1 tom

Time taken: 0.323 seconds, Fetched: 1 row(s)

(10) Exit Hive

hive> quit;

[root@hadoop101 bin]#

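2.3 Example: importing a local file into Hive

Requirement

Import the data in the local file /usr/local/hadoop/module/datas/student.txt into the Hive table student(id int, name string).

2.3.1 Preparing the data

(1) Create the datas directory under /usr/local/hadoop/module: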

[root@hadoop101 module]# mkdir datas

(2) Create student.txt under /usr/local/hadoop/module/datas and add the data:

[root@hadoop101 datas]# touch student.txt

[root@hadoop101 datas]# vi student.txt

1001 zhangshan

1002 lishi

1003 zhaoliu

Note: separate the two fields with a tab character.
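Tabs typed in an editor can silently become spaces, and Hive then reads NULL for the columns it cannot split. A minimal sketch of writing the same three rows with explicit tab characters and checking the result follows. The scratch file stands in for /usr/local/hadoop/module/datas/student.txt, and the variable names are illustrative.

```shell
#!/usr/bin/env bash
# Write the sample rows with explicit \t separators. printf reuses the
# format string for each pair of arguments, so this produces three lines.
data_file="$(mktemp)"   # stand-in for /usr/local/hadoop/module/datas/student.txt
printf '%s\t%s\n' 1001 zhangshan 1002 lishi 1003 zhaoliu > "$data_file"

# Count the lines that do NOT have exactly two tab-separated fields.
bad_lines="$(awk -F'\t' 'NF != 2 {n++} END {print n+0}' "$data_file")"
echo "malformed lines: $bad_lines"
```

The file written this way is 39 bytes long, which is consistent with the totalSize=39 that Hive reports when the file is loaded later in this section.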

2.3.2 Working in Hive

(1) Start Hive

[root@hadoop101 bin]# hive

(2) List the databases

hive> show databases;

OK

default

Time taken: 1.036 seconds, Fetched: 1 row(s)

(3) Use the default database

hive> use default;

OK

Time taken: 0.032 seconds

(4) List the tables in the default database

hive> show tables;

OK

student

Time taken: 0.069 seconds, Fetched: 1 row(s)

(5) Drop the existing student table

hive> drop table student;

(6) Create the student table and declare '\t' as the field delimiter

hive> create table student(id int, name string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

OK

Time taken: 0.343 seconds

(7) Load /usr/local/hadoop/module/datas/student.txt into the student table.

hive> load data local inpath '/usr/local/hadoop/module/datas/student.txt' into table student;

Loading data to table default.student

Table default.student stats: [numFiles=1, totalSize=39]

OK

Time taken: 0.779 seconds

(8) Query the result in Hive

hive> select * from student;

OK

1001 zhangshan

1002 lishi

1003 zhaoliu

Time taken: 0.408 seconds, Fetched: 3 row(s)

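Note: by default Hive keeps its metastore in an embedded Derby database, which allows only one session at a time, so opening a second Hive CLI window fails. To run two or more Hive windows at once, the metastore has to live in an external database such as MySQL, which therefore must be installed on this virtual machine.

MySQL was already installed on my virtual machine, so its installation is skipped here.

Configure MySQL so that root, with the password, can log in from any host. The basic steps are:

1. Log in to MySQL (password: 123456)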

[root@hadoop101 mysql]# mysql -uroot -p

Enter password:

2. List the databases

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| jdbc |

| mybatis |

| mysql |

| performance_schema |

| sys |

+--------------------+

6 rows in set (0.03 sec)

3. Use the mysql database

mysql> use mysql;

Database changed

4. Show the structure of the user table in the mysql database

mysql> desc user;

+------------------------+-----------------------------------+------+-----+-----------------------+-------+

| Field | Type | Null | Key | Default | Extra |

+------------------------+-----------------------------------+------+-----+-----------------------+-------+

| Host | char(60) | NO | PRI | | |

| User | char(32) | NO | PRI | | |

| Select_priv | enum('N','Y') | NO | | N | |

| Insert_priv | enum('N','Y') | NO | | N | |

| Update_priv | enum('N','Y') | NO | | N | |

| Delete_priv | enum('N','Y') | NO | | N | |

| Create_priv | enum('N','Y') | NO | | N | |

| Drop_priv | enum('N','Y') | NO | | N | |

| Reload_priv | enum('N','Y') | NO | | N | |

| Shutdown_priv | enum('N','Y') | NO | | N | |

| Process_priv | enum('N','Y') | NO | | N | |

| File_priv | enum('N','Y') | NO | | N | |

| Grant_priv | enum('N','Y') | NO | | N | |

| References_priv | enum('N','Y') | NO | | N | |

| Index_priv | enum('N','Y') | NO | | N | |

| Alter_priv | enum('N','Y') | NO | | N | |

| Show_db_priv | enum('N','Y') | NO | | N | |

| Super_priv | enum('N','Y') | NO | | N | |

| Create_tmp_table_priv | enum('N','Y') | NO | | N | |

| Lock_tables_priv | enum('N','Y') | NO | | N | |

| Execute_priv | enum('N','Y') | NO | | N | |

| Repl_slave_priv | enum('N','Y') | NO | | N | |

| Repl_client_priv | enum('N','Y') | NO | | N | |

| Create_view_priv | enum('N','Y') | NO | | N | |

| Show_view_priv | enum('N','Y') | NO | | N | |

| Create_routine_priv | enum('N','Y') | NO | | N | |

| Alter_routine_priv | enum('N','Y') | NO | | N | |

| Create_user_priv | enum('N','Y') | NO | | N | |

| Event_priv | enum('N','Y') | NO | | N | |

| Trigger_priv | enum('N','Y') | NO | | N | |

| Create_tablespace_priv | enum('N','Y') | NO | | N | |

| ssl_type | enum('','ANY','X509','SPECIFIED') | NO | | | |

| ssl_cipher | blob | NO | | NULL | |

| x509_issuer | blob | NO | | NULL | |

| x509_subject | blob | NO | | NULL | |

| max_questions | int(11) unsigned | NO | | 0 | |

| max_updates | int(11) unsigned | NO | | 0 | |

| max_connections | int(11) unsigned | NO | | 0 | |

| max_user_connections | int(11) unsigned | NO | | 0 | |

| plugin | char(64) | NO | | mysql_native_password | |

| authentication_string | text | YES | | NULL | |

| password_expired | enum('N','Y') | NO | | N | |

| password_last_changed | timestamp | YES | | NULL | |

| password_lifetime | smallint(5) unsigned | YES | | NULL | |

| account_locked | enum('N','Y') | NO | | N | |

+------------------------+-----------------------------------+------+-----+-----------------------+-------+

45 rows in set (0.01 sec)

6. Query the user table

mysql> select host,user,authentication_string from user;

+-----------+---------------+-------------------------------------------+

| host | user | authentication_string |

+-----------+---------------+-------------------------------------------+

| localhost | root | *6BB4837EB74329105EE4568DDA7DC67ED2CA2AD9 |

| localhost | mysql.session | *THISISNOTAVALIDPASSWORDTHATCANBEUSEDHERE |

| localhost | mysql.sys | *THISISNOTAVALIDPASSWORDTHATCANBEUSEDHERE |

| % | root | *81F5E21E35407D884A6CD4A731AEBFB6AF209E1B |

+-----------+---------------+-------------------------------------------+

4 rows in set (0.01 sec)

7. Flush privileges

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)

8. Exit

mysql> quit;

3. Configuring the Metastore in MySQL

3.1 Create hive-site.xml in /usr/local/hadoop/module/hive-1.2.1/conf/

[root@hadoop101 ~]# cd /usr/local/hadoop/module/hive-1.2.1/conf/

[root@hadoop101 conf]# touch hive-site.xml

[root@hadoop101 conf]# vi hive-site.xml

3.2 Following the official documentation, copy the configuration below into hive-site.xml

https://cwiki.apache.org/confluence/display/Hive/AdminManual+MetastoreAdmin

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/metastore?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

<description>password to use against metastore database</description>

</property>

</configuration>

3.3.1 Create a my-libs directory under /usr/local/java/mysql

[root@hadoop101 mysql]# mkdir my-libs

3.3.2 Upload the JDBC driver package mysql-connector-java-5.1.27.tar.gz to /usr/local/java/mysql/my-libs and extract it

[root@hadoop101 mysql]# tar -zxvf mysql-connector-java-5.1.27.tar.gz -C /usr/local/java/mysql/my-libs/

[root@hadoop101 mysql-connector-java-5.1.27]# ll

total 1264

-rw-r--r-- 1 root root 47173 Oct 23 2013 build.xml

-rw-r--r-- 1 root root 222520 Oct 23 2013 CHANGES

-rw-r--r-- 1 root root 18122 Oct 23 2013 COPYING

drwxr-xr-x 2 root root 71 Dec 29 19:14 docs

-rw-r--r-- 1 root root 872303 Oct 23 2013 mysql-connector-java-5.1.27-bin.jar

-rw-r--r-- 1 root root 61423 Oct 23 2013 README

-rw-r--r-- 1 root root 63674 Oct 23 2013 README.txt

drwxr-xr-x 7 root root 67 Oct 23 2013 src

[root@hadoop101 mysql-connector-java-5.1.27]#

3.3.3 Copy mysql-connector-java-5.1.27-bin.jar from the extracted package into /usr/local/hadoop/module/hive-1.2.1/lib

[root@hadoop101 mysql-connector-java-5.1.27]# cp mysql-connector-java-5.1.27-bin.jar /usr/local/hadoop/module/hive-1.2.1/lib/

3.3.4 The configuration has changed, so exit Hive and start it again

Note:

First make sure the MySQL service is running, then log in:

[root@hadoop101 mysql]# mysql -uroot -p

Enter password:

List the databases:

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| jdbc |

| mybatis |

| mysql |

| performance_schema |

| sys |

+--------------------+

6 rows in set (0.00 sec)

Start Hive again:

[root@hadoop101 hive-1.2.1]# bin/hive

Logging initialized using configuration in jar:file:/usr/local/hadoop/module/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

hive>

Back in MySQL, the database list now contains a new metastore database:

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| jdbc |

| metastore |

| mybatis |

| mysql |

| performance_schema |

| sys |

+--------------------+

7 rows in set (0.00 sec)

Look inside the metastore database:

mysql> use metastore;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

List its tables:

mysql> show tables;

+---------------------------+

| Tables_in_metastore |

+---------------------------+

| BUCKETING_COLS |

| CDS |

| COLUMNS_V2 |

| DATABASE_PARAMS |

| DBS |

| FUNCS |

| FUNC_RU |

| GLOBAL_PRIVS |

| PARTITIONS |

| PARTITION_KEYS |

| PARTITION_KEY_VALS |

| PARTITION_PARAMS |

| PART_COL_STATS |

| ROLES |

| SDS |

| SD_PARAMS |

| SEQUENCE_TABLE |

| SERDES |

| SERDE_PARAMS |

| SKEWED_COL_NAMES |

| SKEWED_COL_VALUE_LOC_MAP |

| SKEWED_STRING_LIST |

| SKEWED_STRING_LIST_VALUES |

| SKEWED_VALUES |

| SORT_COLS |

| TABLE_PARAMS |

| TAB_COL_STATS |

| TBLS |

| VERSION |

+---------------------------+

29 rows in set (0.00 sec)

Open a second terminal and start another Hive session:

[root@hadoop101 bin]# hive

Logging initialized using configuration in jar:file:/usr/local/hadoop/module/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

hive>

Then list the databases:

hive> show databases;

OK

default

Time taken: 1.209 seconds, Fetched: 1 row(s)

hive>

4. Common Hive interactive commands

4.1 Hive help:

[root@hadoop101 hive-1.2.1]# bin/hive -help

usage: hive

-d,--define <key=value> Variable subsitution to apply to hive

commands. e.g. -d A=B or --define A=B

--database <databasename> Specify the database to use

-e <quoted-query-string> SQL from command line

-f <filename> SQL from files

-H,--help Print help information

--hiveconf <property=value> Use value for given property

--hivevar <key=value> Variable subsitution to apply to hive

commands. e.g. --hivevar A=B

-i <filename> Initialization SQL file

-S,--silent Silent mode in interactive shell

-v,--verbose Verbose mode (echo executed SQL to the

console)

[root@hadoop101 hive-1.2.1]#

Start Hive:

[root@hadoop101 hive-1.2.1]# bin/hive

Logging initialized using configuration in jar:file:/usr/local/hadoop/module/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

List the databases and tables:

hive> show databases;

OK

default

Time taken: 1.006 seconds, Fetched: 1 row(s)

hive>

hive> show tables;

OK

Time taken: 0.069 seconds

hive>

Create a student table:

hive> create table student(id int,name string,age int)ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' ;

OK

Time taken: 0.137 seconds

hive>

Query the student table:

hive> select * from student;

OK

1001 zhangshan NULL

1002 lishi NULL

1003 zhaoliu NULL

Time taken: 0.14 seconds, Fetched: 3 row(s)

hive>

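Note: rows appear right away because the student.txt file loaded earlier is still in the table's warehouse directory on HDFS. The age column is NULL because each line of that file has only two fields.

Next, the common command-line options.

1. "-e": run a SQL statement without entering the Hive interactive shell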

[root@hadoop101 hive-1.2.1]# bin/hive -e "select id from student;"

Logging initialized using configuration in jar:file:/usr/local/hadoop/module/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

OK

1001

1002

1003

Time taken: 2.416 seconds, Fetched: 3 row(s)

[root@hadoop101 hive-1.2.1]#

2. "-f": run the SQL statements in a script file

(1) Create hivef.sql under /usr/local/hadoop/module/datas

[root@hadoop101 datas]# touch hivef.sql

[root@hadoop101 datas]# vim hivef.sql

select * from student;

(2) Run the SQL statements in the file

[root@hadoop101 hive-1.2.1]# bin/hive -f /usr/local/hadoop/module/datas/hivef.sql

OK

1001 zhangshan NULL

1002 lishi NULL

1003 zhaoliu NULL

Time taken: 2.598 seconds, Fetched: 3 row(s)

[root@hadoop101 hive-1.2.1]#

(3) Run the SQL statements in the file and write the result to a file

[root@hadoop101 hive-1.2.1]# bin/hive -f /usr/local/hadoop/module/datas/hivef.sql > /usr/local/hadoop/module/hive_result.txt

Logging initialized using configuration in jar:file:/usr/local/hadoop/module/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

OK

Time taken: 2.452 seconds, Fetched: 3 row(s)

[root@hadoop101 hive-1.2.1]#

View the contents of hive_result.txt:

[root@hadoop101 module]# cat hive_result.txt

1001 zhangshan NULL

1002 lishi NULL

1003 zhaoliu NULL

[root@hadoop101 module]#

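5. Other Hive commands

5.1 Exiting the Hive shell:

hive(default)> exit;

hive(default)> quit;

In recent versions of Hive the two are equivalent. In older versions they differed:

exit: implicitly commits the data, then exits;

quit: exits without committing.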

5.2 Viewing the HDFS file system from the Hive CLI

hive> dfs -ls /;

Found 2 items

drwx-wx-wx - root supergroup 0 2019-12-29 17:17 /tmp

drwxr-xr-x - root supergroup 0 2019-12-29 17:17 /user

hive>

5.3 Viewing the local file system from the Hive CLI

hive> ! ls /usr/local/hadoop/module/datas;

hivef.sql

hive_result.txt

student.txt

5.4 Viewing the history of all commands entered in Hive

[root@hadoop101 ~]# cat .hivehistory

show databases;

use default;

show tables;

create table student(id int, name string);

show tables;

desc student;

insert into student (id,name) values (1,'tom');

select * from student;

quit;

exit

show databases;

exit;

show databases;

show tables;

create table student(id int,name strung)row format delimited fields terminated by '\t'

show databases;

show tables;

create table student(id int,name strung)row format delimited fields terminated by '\t';

create table sqoop_test(NoViableAltException(26@[])

create table sqoop_test(id int,name string,age int)ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' ;

create table hive_test(id int,name string,age int)ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' ;

select * from hive_test;

create table student(id int,name string,age int)ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' ;

select * from student;

exit;

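6. Common Hive property settings

6.1 Configuring the data warehouse location

1) By default the warehouse is located at /user/hive/warehouse on HDFS.

2) No folder is created under the warehouse directory for the default database. A table that belongs to the default database gets its folder directly under the warehouse directory.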

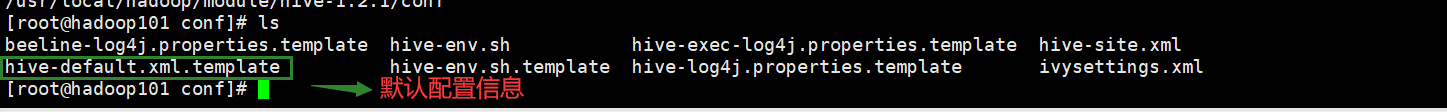

3) To change the default warehouse location, copy the corresponding configuration from hive-default.xml.template into hive-site.xml.

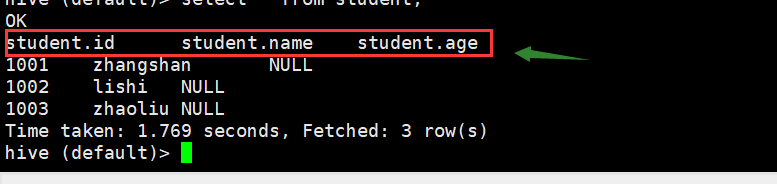

The following two properties, also placed in hive-site.xml, make the CLI print column headers and show the current database in the prompt:

<property>

<name>hive.cli.print.header</name>

<value>true</value>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

</property>

After editing hive-site.xml, exit and restart Hive:

hive> exit;

[root@hadoop101 hive-1.2.1]# bin/hive

Logging initialized using configuration in jar:file:/usr/local/hadoop/module/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

hive (default)>

Next, query the student table again:

hive (default)> select * from student;

OK

student.id student.name student.age

1001 zhangshan NULL

1002 lishi NULL

1003 zhaoliu NULL

Time taken: 1.769 seconds, Fetched: 3 row(s)

hive (default)>

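As the output shows, the column names are now printed above the rows, and the prompt shows the current database.

The warehouse path is controlled by the following parameter in hive-default.xml.template, shown here with its default value: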

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

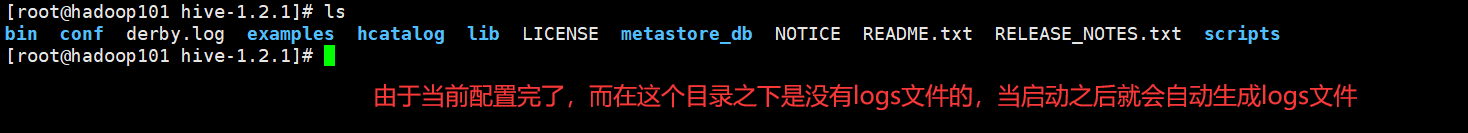

7. Configuring Hive runtime logs

7.1 Go to the conf directory under the Hive installation directory, find hive-log4j.properties.template, and copy it to hive-log4j.properties

[root@hadoop101 hive-1.2.1]# cd conf/

[root@hadoop101 conf]#

[root@hadoop101 conf]# cp hive-log4j.properties.template hive-log4j.properties

[root@hadoop101 conf]#

7.2 Edit hive-log4j.properties and set the log directory

hive.log.dir=/usr/local/hadoop/module/hive-1.2.1/logs

Save and exit.
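The same change can be scripted instead of made in an editor. The sketch below rewrites the hive.log.dir line of a properties file. The helper name set_hive_log_dir and the scratch file contents are assumptions made for illustration, and the helper expects a directory path that contains no `|` or `&` characters.

```shell
#!/usr/bin/env bash
# set_hive_log_dir (hypothetical helper): replace the hive.log.dir entry of a
# log4j properties file. It goes through a temp file rather than sed -i,
# whose syntax differs between platforms.
set_hive_log_dir() {
  local props="$1" log_dir="$2" tmp
  tmp="$(mktemp)"
  sed "s|^hive\.log\.dir=.*|hive.log.dir=$log_dir|" "$props" > "$tmp" \
    && cat "$tmp" > "$props"   # overwrite in place, keeping the file's permissions
  rm -f "$tmp"
}

# Demonstration on a scratch file with a template-style entry.
props_file="$(mktemp)"
printf 'hive.log.threshold=ALL\nhive.log.dir=${java.io.tmpdir}/${user.name}\nhive.log.file=hive.log\n' > "$props_file"
set_hive_log_dir "$props_file" /usr/local/hadoop/module/hive-1.2.1/logs
grep '^hive.log.dir=' "$props_file"
```

On the real installation the first argument would be conf/hive-log4j.properties, and Hive has to be restarted for the new directory to take effect.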

8. Ways to configure parameters

8.1 Viewing all current settings

hive> set;

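8.2 The three ways to set a parameter

(1) Configuration files

Default configuration file: hive-default.xml

User-defined configuration file: hive-site.xml

Note: user-defined settings override the defaults. Hive also reads the Hadoop configuration, because Hive starts as a Hadoop client, and Hive's settings override Hadoop's. Settings made in configuration files apply to every Hive process started on this machine.

(2) Command-line arguments

When starting Hive, add -hiveconf param=value on the command line to set a parameter.

For example, check the current value of mapred.reduce.tasks from inside the session: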

hive (default)> set mapred.reduce.tasks;

mapred.reduce.tasks=-1

hive (default)>

(3) SET statements

Parameters can be set in HQL with the SET keyword.

For example:

hive (default)> set mapred.reduce.tasks=100;

hive (default)>

Note: this is effective only for the current Hive session.

Check the setting:

hive (default)> set mapred.reduce.tasks;

mapred.reduce.tasks=100

hive (default)>

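The priority of the three methods increases in that order: configuration file < command-line argument < SET statement. Note that some system-level parameters, such as the log4j settings, must be set with one of the first two methods, because they are read before the session is established.

Setting a parameter at startup:

Close the running Hive session first, then start it again: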

hive (default)> exit;

[root@hadoop101 hive-1.2.1]# bin/hive -hiveconf hive.cli.print.current.db=false

Logging initialized using configuration in file:/usr/local/hadoop/module/hive-1.2.1/conf/hive-log4j.properties

hive>

hive> set hive.cli.print.current.db=true;

hive (default)>

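Here the -hiveconf value overrides hive-site.xml (the prompt no longer shows the database), and the later SET statement overrides -hiveconf.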
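The precedence order can be pictured as layers where a later layer wins. The sketch below is a toy model of that rule only, not how Hive resolves settings internally. The function name resolve and the three variables are assumptions made for illustration; each variable holds one key=value pair standing for one layer.

```shell
#!/usr/bin/env bash
# resolve (hypothetical helper): given a key and a list of "key=value" pairs
# ordered from lowest to highest priority, print the value of the last pair
# that sets the key.
resolve() {
  key="$1"; shift
  value=""
  for pair in "$@"; do
    case "$pair" in
      "$key="*) value="${pair#*=}" ;;   # a later layer overrides an earlier one
    esac
  done
  printf '%s\n' "$value"
}

site_xml='hive.cli.print.current.db=true'     # layer 1: hive-site.xml
hiveconf='hive.cli.print.current.db=false'    # layer 2: -hiveconf at startup
set_stmt='hive.cli.print.current.db=true'     # layer 3: SET inside the session

after_hiveconf="$(resolve hive.cli.print.current.db "$site_xml" "$hiveconf")"
after_set="$(resolve hive.cli.print.current.db "$site_xml" "$hiveconf" "$set_stmt")"
echo "after -hiveconf: $after_hiveconf"
echo "after SET:       $after_set"
```

The two results, false and then true, mirror the prompt changing from `hive>` to `hive (default)>` in the session above.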