
1. Motivation

DETR achieves state-of-the-art performance, but it also takes much longer to converge.

DETR is a recently proposed Transformer-based method which views object detection as a set prediction problem and achieves state-of-the-art performance but demands extra-long training time to converge.

Motivated by this, the authors investigate the optimization difficulties in DETR training. Faster RCNN needs only 30 training epochs, whereas DETR needs 500.

Therefore, in what manner should we accelerate the training process towards fast convergence for DETR-like Transformer-based detectors is a challenging research question and is the main focus of this paper.

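The Transformer decoder is the main cause of the slow convergence. In addition, the instability of the bipartite matching used by DETR's Hungarian loss contributes to the slow convergence as well.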

2. Contribution

The authors propose two models, TSP-FCOS and TSP-RCNN, to address the problems in the Hungarian loss and in the Transformer cross-attention mechanism.

To overcome these issues, we propose two solutions, namely, TSP-FCOS (Transformer-based Set Prediction with FCOS) and TSP-RCNN (Transformer-based Set Prediction with RCNN).

- A novel FoI (Feature of Interest) selection module is used in TSP-FCOS to help the Transformer encoder handle multi-level features.

A novel Feature of Interest (FoI) selection mechanism is developed in TSP-FCOS to help Transformer encoder handle multi-level features.

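- To resolve the instability of the bipartite matching in the Hungarian algorithm, the authors also design a new bipartite matching scheme for each of the two models to accelerate convergence in training.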

To resolve the instability of the bipartite matching in the Hungarian loss, we also design a new bipartite matching scheme for each of our two models for accelerating the convergence in training.

3. What Causes the Slow Convergence of DETR?

3.1 Does Instability of the Bipartite Matching Affect Convergence?

The authors attribute the instability of the bipartite matching in the Hungarian algorithm to two factors:

- The initialization of the bipartite matching is essentially random;

- The matching instability would be caused by noisy conditions in different training epochs.

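To examine these factors, the authors propose a matching distillation experiment. A pretrained DETR serves as the teacher model, and the matching predicted by the teacher is used as the ground-truth label assignment for the student model. Stochastic modules such as dropout and batch normalization are turned off so that the provided matching is deterministic.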
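A minimal sketch of the matching step, assuming a precomputed cost matrix between predictions and ground-truth objects (building that cost from class and box terms is omitted). It uses SciPy's `linear_sum_assignment` as the Hungarian solver; `hungarian_match` and `label_assignment` are hypothetical helper names, and the teacher branch only illustrates the idea of matching distillation, not the authors' code.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def hungarian_match(cost):
    """Return (prediction_index, gt_index) pairs minimizing total matching cost.

    cost has shape (num_predictions, num_gt); rectangular matrices are allowed,
    in which case min(num_predictions, num_gt) pairs are returned.
    """
    rows, cols = linear_sum_assignment(np.asarray(cost, dtype=float))
    return list(zip(rows.tolist(), cols.tolist()))


def label_assignment(student_cost, teacher_cost=None):
    """Hypothetical helper illustrating matching distillation.

    Without a teacher, the student is matched using its own predictions, which
    change (noisily) from epoch to epoch. With a frozen, deterministic teacher,
    the teacher's cost matrix fixes the assignment instead.
    """
    cost = student_cost if teacher_cost is None else teacher_cost
    return hungarian_match(cost)


student_cost = np.array([[4.0, 1.0, 3.0],
                         [2.0, 0.0, 5.0],
                         [3.0, 2.0, 2.0]])
# A teacher whose cost strongly prefers the diagonal matching.
teacher_cost = 1.0 - np.eye(3)

print(label_assignment(student_cost))                # [(0, 1), (1, 0), (2, 2)]
print(label_assignment(student_cost, teacher_cost))  # [(0, 0), (1, 1), (2, 2)]
```

The student's own optimum (total cost 5) differs from the teacher's assignment, which is exactly the kind of disagreement matching distillation removes.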

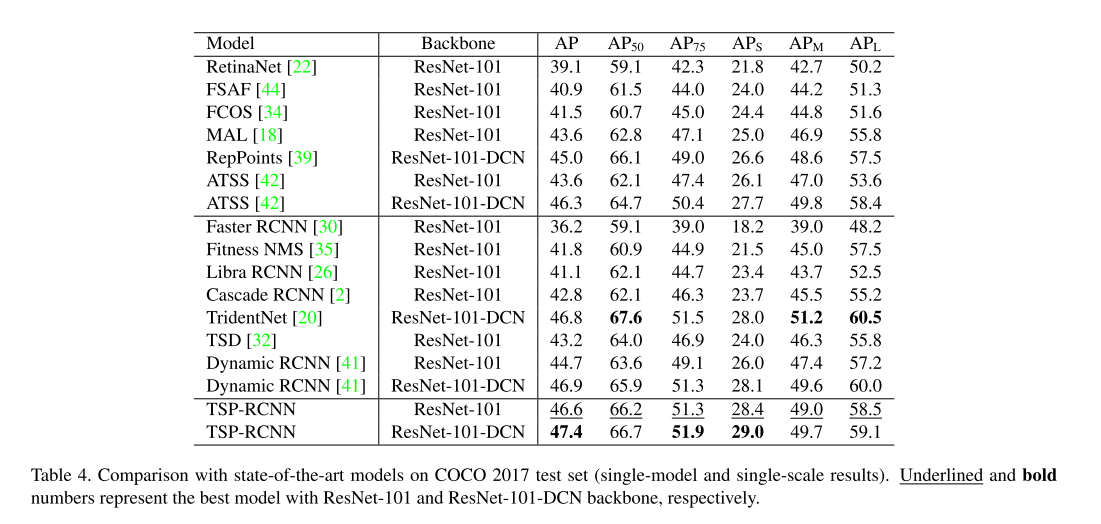

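Figure 1 shows the results of the original DETR and the matching-distilled DETR over the first 25 epochs. Matching distillation accelerates convergence in the first few epochs, but the effect becomes insignificant after 15 epochs.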

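This means that the instability of the bipartite matching accounts for only part of the slow convergence, mainly in the early stage of training, and is not the main cause.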

3.2 Are the Attention Modules the Main Cause?

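Transformer attention maps are nearly uniform at initialization but become gradually sparser during training. For BERT, replacing some attention heads with sparser modules (e.g., convolutions) has been shown to accelerate training.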

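The authors focus on the sparsity dynamics of the cross-attention module, because cross-attention is the key module through which the decoder's object queries obtain object information from the encoder.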

Imprecise (under-optimized) cross-attention may not allow the decoder to extract accurate context information from images, and thus results in poor localization, especially for small objects.

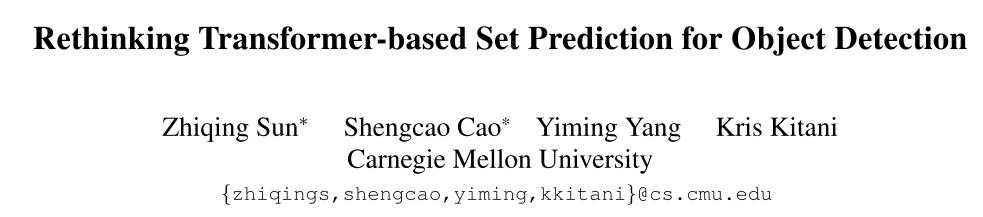

Because attention maps can be interpreted as probability distributions, the authors use negative entropy as an intuitive measure of sparsity. Given an $n \times m$ attention map $a$, where $a_{i,j}$ is the attention score from source position $i$ to target position $j$, the sparsity of each source position $i \in [n]$ is first computed as

$$\frac{1}{m} \sum_{j=1}^{m} P(a_{i,j}) \log P(a_{i,j}).$$

These values are then averaged over all attention heads and all source positions in each layer.
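The sparsity measure can be sketched in NumPy as follows, assuming the attention scores are already softmax-normalized so that $P(a_{i,j}) = a_{i,j}$; `attention_sparsity` is a hypothetical name. Because $p \log p \le 0$, the value is at most 0 (reached by a one-hot map) and decreases as the map becomes more uniform, so larger values mean sparser attention.

```python
import numpy as np


def attention_sparsity(attn):
    """Negative-entropy sparsity of one layer's attention maps.

    attn: array of shape (num_heads, n, m); each row attn[h, i, :] is assumed
    to be a softmax-normalized distribution over the m target positions.
    Returns the mean over heads and source positions of
    (1/m) * sum_j a_ij * log a_ij.
    """
    attn = np.asarray(attn, dtype=float)
    m = attn.shape[-1]
    plogp = np.zeros_like(attn)
    mask = attn > 0                      # convention: 0 * log 0 = 0
    plogp[mask] = attn[mask] * np.log(attn[mask])
    per_source = plogp.sum(axis=-1) / m  # sparsity of each source position i
    return float(per_source.mean())      # average over heads and sources


# Uniform maps (as at initialization) versus fully sparse one-hot maps.
uniform = np.full((8, 100, 4), 0.25)
one_hot = np.zeros((8, 100, 4))
one_hot[..., 0] = 1.0

print(attention_sparsity(uniform))  # about -0.3466, i.e. -log(4) / 4
print(attention_sparsity(one_hot))  # 0.0, the maximum possible value
```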

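As Figure 2 shows, the sparsity of cross-attention increases consistently and still has not reached a plateau even after 100 epochs. This suggests that the cross-attention module plays a more decisive role in DETR's slow convergence.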

3.3 Does DETR Really Need Cross-attention?

Our next question is: Can we remove the cross-attention module from DETR for faster convergence but without sacrificing its prediction power in object detection?

The authors design an encoder-only version of DETR and compare its convergence with that of the original DETR.

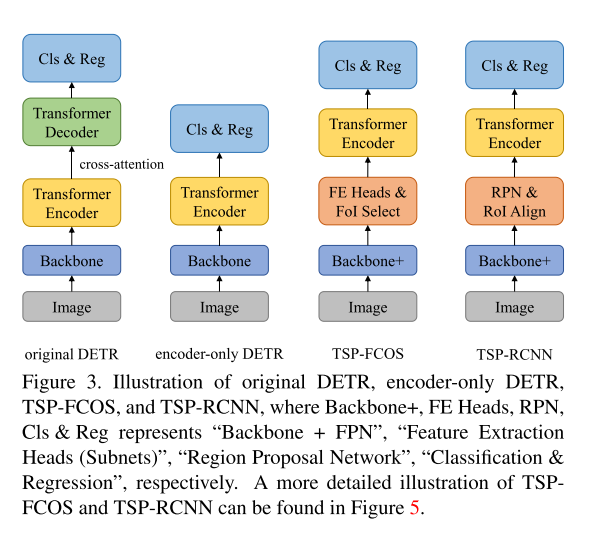

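In the encoder-only version, the encoder outputs are used directly for object detection (category label and bounding box): each encoder output feature is fed into the detection head to predict a detection result. Figure 3 compares the architectures of the original DETR, encoder-only DETR, TSP-FCOS, and TSP-RCNN.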
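A minimal NumPy sketch of a shared prediction head applied to every encoder output, under simplifying assumptions: single linear projections stand in for the feed-forward networks, boxes are predicted as normalized $(cx, cy, w, h)$ through a sigmoid, and one extra class represents "no object". `shared_detection_head` and its random weights are hypothetical.

```python
import numpy as np


def shared_detection_head(enc_out, w_cls, b_cls, w_box, b_box):
    """Apply one shared head to every encoder output feature.

    enc_out: (N, d) encoder outputs, one per position.
    w_cls: (d, C + 1) class projection; the extra class is "no object".
    w_box: (d, 4) box projection; a sigmoid keeps (cx, cy, w, h) in [0, 1].
    Returns class logits (N, C + 1) and normalized boxes (N, 4).
    """
    logits = enc_out @ w_cls + b_cls
    boxes = 1.0 / (1.0 + np.exp(-(enc_out @ w_box + b_box)))
    return logits, boxes


rng = np.random.default_rng(0)
N, d, C = 300, 256, 80  # illustrative sizes, not taken from the paper
logits, boxes = shared_detection_head(
    rng.normal(size=(N, d)),
    0.02 * rng.normal(size=(d, C + 1)), np.zeros(C + 1),
    0.02 * rng.normal(size=(d, 4)), np.zeros(4),
)
print(logits.shape, boxes.shape)  # (300, 81) (300, 4)
```

Because the same weights are used at every position, each encoder feature yields exactly one candidate detection, which is what makes the encoder-only variant a set predictor without decoder queries.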

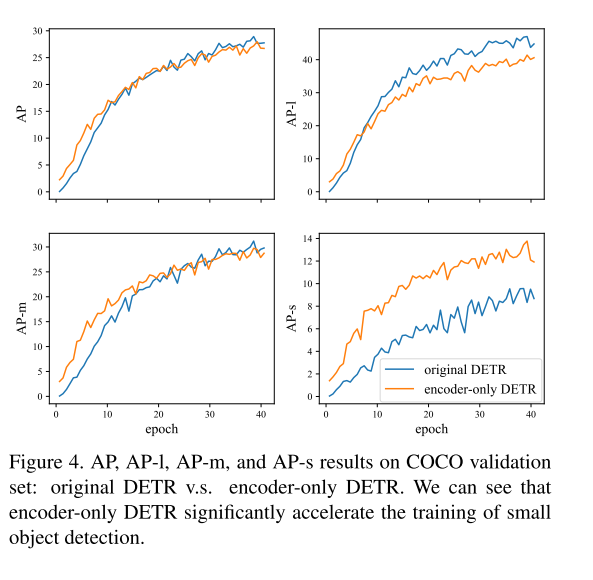

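Figure 4 shows the AP curves of the original DETR and the encoder-only DETR. The first panel shows the overall AP curves, where the two models perform almost identically. This is a positive result, indicating that the cross-attention part can be removed. Moreover, encoder-only DETR outperforms the original DETR on small objects and partly on medium objects, but has lower AP on large objects.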

A potential interpretation, we think, is that a large object may include too many potentially matchable feature points, which are difficult for the sliding point scheme in the encoder-only DETR to handle.

4. The Proposed Methods

4.1 TSP-FCOS

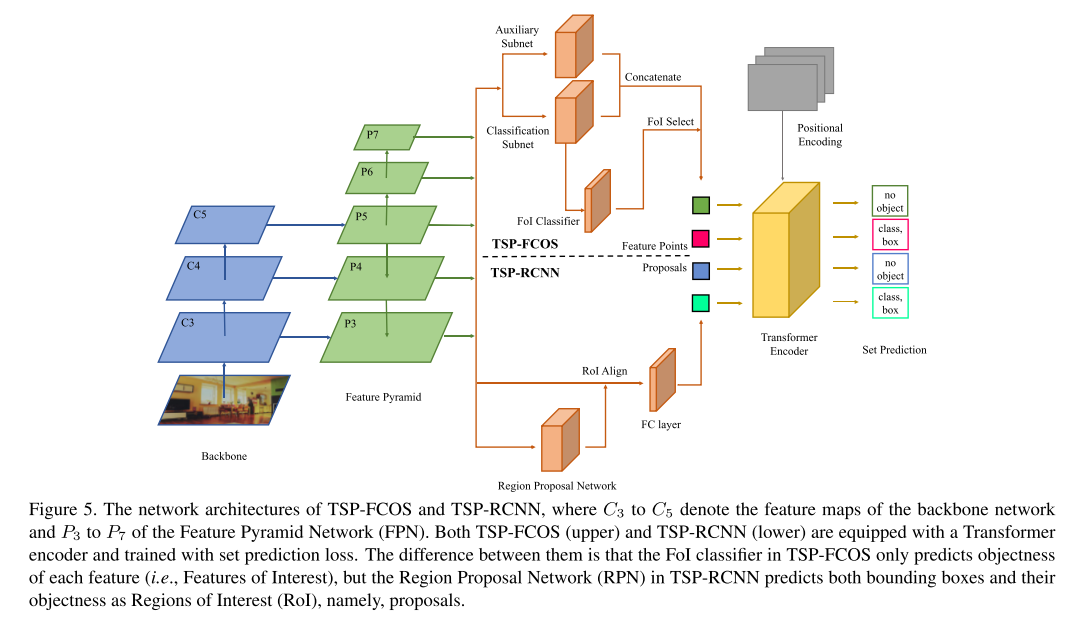

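TSP-FCOS combines the strengths of FCOS and encoder-only DETR. It introduces a new component called the Feature of Interest (FoI) selector, which lets the Transformer encoder handle multi-level features, together with a new bipartite matching scheme. The upper part of Figure 5 shows the TSP-FCOS architecture.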

- Backbone and FPN

- Feature extraction subnets

There are two subnets: an auxiliary subnet (head) and a classification subnet (head).

Their outputs are concatenated and then selected by FoI classifier.

Subnet architecture:

Both classification subnet and auxiliary subnet use four 3×3 convolutional layers with 256 channels and group normalization [37].
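As a rough worked example, the parameter count of one such subnet can be computed as below, assuming 256 input channels from the FPN, convolutions with bias, and a learnable per-channel scale and shift in each group normalization layer. The helper is hypothetical and ignores the final prediction layers.

```python
def conv_gn_subnet_params(num_layers=4, channels=256, kernel=3, bias=True):
    """Parameters of a stack of kernel x kernel conv + GroupNorm layers (channels -> channels)."""
    conv = kernel * kernel * channels * channels + (channels if bias else 0)
    gn = 2 * channels  # GroupNorm affine scale and shift, one pair per channel
    return num_layers * (conv + gn)


print(conv_gn_subnet_params())            # 2362368, about 2.36M per subnet
print(conv_gn_subnet_params(bias=False))  # 2361344
```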

- Feature of Interest (FoI) classifier

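To improve the efficiency of self-attention, the authors design a binary classifier that selects a limited subset of features as FoIs. The binary FoI classifier is trained with the FCOS label assignment rule.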

After FoI classification, top scored features are picked as FoIs and fed into the Transformer encoder.

In FoI selection, we select top 700 scored feature positions from FoI classifier as the input of Transformer encoder.
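Selecting the top-scored positions is a simple top-k operation. A NumPy sketch, assuming the concatenated subnet outputs of all FPN levels are flattened into one `(N, d)` array with one FoI score per position; `select_fois` is a hypothetical helper, and the default $k = 700$ follows the text.

```python
import numpy as np


def select_fois(features, foi_scores, positions, k=700):
    """Keep the k highest-scoring feature positions as Features of Interest.

    features: (N, d) concatenated subnet outputs for all FPN positions.
    foi_scores: (N,) FoI classifier scores.
    positions: (N, 2) (x, y) location of each feature, kept for positional encoding.
    """
    k = min(k, len(foi_scores))
    top = np.argsort(-foi_scores, kind="stable")[:k]  # indices by descending score
    return features[top], positions[top], top


scores = np.array([0.1, 0.9, 0.5, 0.7])
feats = np.arange(8.0).reshape(4, 2)
pos = np.array([[0, 0], [8, 0], [0, 8], [8, 8]])
f, p, idx = select_fois(feats, scores, pos, k=2)
print(idx)  # [1 3]
```

Only the selected FoIs enter the Transformer encoder, so self-attention cost scales with $k$ rather than with the total number of FPN positions.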

- Transformer encoder

Input:

After the FoI selection step, the input to Transformer encoder is a set of FoIs and their corresponding positional encoding.

Output:

The outputs of the encoder pass through a shared feed forward network, which predicts the category label (including “no object”) and bounding box for each FoI.

- Positional encoding

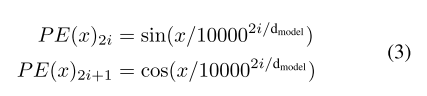

The positional encoding is defined as $[PE(x):PE(y)]$, where $[:]$ denotes concatenation and $PE$ is given by Eq. 3 above, with $d_{model}$ the dimension of the FoIs.
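A sketch of $[PE(x):PE(y)]$, assuming Eq. 3 is the standard sine/cosine encoding of the original Transformer (temperature 10000) and that each coordinate receives $d_{model}/2$ dimensions so the concatenation has size $d_{model}$. The function names are hypothetical; check Eq. 3 for the exact exponent and scaling.

```python
import numpy as np


def sinusoid_1d(v, dim, temperature=10000.0):
    """Sine/cosine encoding of a scalar v into `dim` values (dim assumed even)."""
    i = np.arange(dim // 2)
    angles = v / temperature ** (2 * i / dim)
    pe = np.empty(dim)
    pe[0::2] = np.sin(angles)  # even dimensions
    pe[1::2] = np.cos(angles)  # odd dimensions
    return pe


def foi_positional_encoding(x, y, d_model=256):
    """[PE(x) : PE(y)]: each coordinate gets d_model // 2 dims, concatenated to d_model."""
    half = d_model // 2
    return np.concatenate([sinusoid_1d(x, half), sinusoid_1d(y, half)])


pe = foi_positional_encoding(0.25, 0.75, d_model=256)
print(pe.shape)             # (256,)
print(sinusoid_1d(0.0, 8))  # [0. 1. 0. 1. 0. 1. 0. 1.]
```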

- Faster set prediction training

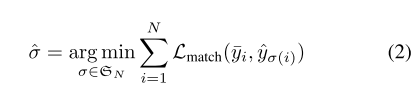

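Following FCOS, a feature point can be assigned to a ground-truth object only if the point lies inside the object's bounding box and is in the proper FPN level. A more restricted cost-based matching (Eq. 2) then determines the optimal matching between detection results and ground-truth objects, and this matching is used in the Hungarian loss (Eq. 1).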

A feature point can be assigned to a ground-truth object only when the point is in the bounding box of the object and in the proper feature pyramid level.
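The restriction can be sketched as a validity mask over (point, ground truth) pairs that is applied to the cost matrix before Hungarian matching. This sketch assumes FCOS's default per-level regression ranges for P3 to P7 and forbids invalid pairs by giving them a large cost; `fcos_valid_mask` and `restricted_cost` are hypothetical helpers, not the authors' implementation.

```python
import numpy as np

# Assumed FCOS default ranges of max(l, t, r, b) for levels P3..P7.
SIZE_RANGES = [(0, 64), (64, 128), (128, 256), (256, 512), (512, np.inf)]


def fcos_valid_mask(points, levels, gt_boxes):
    """valid[p, g] is True if point p may be assigned to ground-truth box g.

    points: (P, 2) (x, y) image coordinates; levels: (P,) FPN level index 0..4.
    gt_boxes: (G, 4) boxes as (x1, y1, x2, y2).
    """
    x, y = points[:, 0:1], points[:, 1:2]     # (P, 1) each
    l = x - gt_boxes[:, 0]                     # broadcasts to (P, G)
    t = y - gt_boxes[:, 1]
    r = gt_boxes[:, 2] - x
    b = gt_boxes[:, 3] - y
    ltrb = np.stack([l, t, r, b], axis=-1)     # (P, G, 4)
    inside = ltrb.min(axis=-1) > 0             # point lies inside the box
    max_reg = ltrb.max(axis=-1)
    lo = np.array([SIZE_RANGES[k][0] for k in levels], dtype=float)[:, None]
    hi = np.array([SIZE_RANGES[k][1] for k in levels], dtype=float)[:, None]
    right_level = (max_reg >= lo) & (max_reg <= hi)  # box scale fits this level
    return inside & right_level


def restricted_cost(cost, valid, big=1e6):
    """Forbid invalid (prediction, ground truth) pairs before Hungarian matching."""
    return np.where(valid, cost, big)


gt = np.array([[0.0, 0.0, 100.0, 100.0]])
pts = np.array([[50.0, 50.0], [50.0, 50.0], [150.0, 50.0]])
lvls = np.array([0, 1, 0])
valid = fcos_valid_mask(pts, lvls, gt)
print(valid.ravel())  # [ True False False]
print(restricted_cost(np.zeros((3, 1)), valid).ravel())
```

The second point is inside the box but on a level whose range is too coarse for it, and the third point lies outside the box, so both are excluded from matching.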

4.2 TSP-RCNN

Compared with TSP-FCOS, TSP-RCNN requires more computational resources but can detect objects more accurately.

- Region proposal network

We follow the design of Faster RCNN [30] and use a Region Proposal Network (RPN) to get a set of Regions of Interest (RoIs) to be further refined.

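Unlike FoIs, each RoI contains not only an objectness score but also a predicted bounding box. The authors use RoIAlign to extract RoI features from the multi-level feature maps. The extracted features are then flattened and fed into a fully connected network to form the input of the Transformer encoder.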

- Positional encoding

The location of an RoI proposal is represented as $(cx, cy, w, h)$, where $(cx, cy) \in [0, 1]^2$ is the normalized center coordinate and $(w, h) \in [0, 1]^2$ are the normalized width and height. The authors use $[PE(cx):PE(cy):PE(w):PE(h)]$ as the positional encoding of the proposal.
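A sketch of the four-part proposal encoding, assuming proposals arrive as pixel boxes $(x_1, y_1, x_2, y_2)$ that are first normalized by the image size, and that each of $cx, cy, w, h$ gets $d_{model}/4$ dimensions of a standard sine/cosine encoding. Function names are hypothetical; implementations often also scale normalized coordinates (e.g., by $2\pi$) before encoding, which is omitted here.

```python
import numpy as np


def sinusoid_1d(v, dim, temperature=10000.0):
    """Sine/cosine encoding of a scalar v into `dim` values (dim assumed even)."""
    i = np.arange(dim // 2)
    angles = v / temperature ** (2 * i / dim)
    pe = np.empty(dim)
    pe[0::2] = np.sin(angles)
    pe[1::2] = np.cos(angles)
    return pe


def roi_positional_encoding(box_xyxy, img_w, img_h, d_model=256):
    """[PE(cx) : PE(cy) : PE(w) : PE(h)] for one proposal given in pixel coordinates."""
    x1, y1, x2, y2 = box_xyxy
    cx, cy = (x1 + x2) / 2 / img_w, (y1 + y2) / 2 / img_h  # normalized center
    w, h = (x2 - x1) / img_w, (y2 - y1) / img_h            # normalized size
    quarter = d_model // 4
    return np.concatenate([sinusoid_1d(v, quarter) for v in (cx, cy, w, h)])


pe = roi_positional_encoding((0.0, 0.0, 640.0, 480.0), img_w=640, img_h=480)
print(pe.shape)  # (256,)
```

Unlike the FoI encoding, the box size $(w, h)$ is encoded explicitly, which is possible because every RoI carries a predicted bounding box.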

- Faster set prediction training

Unlike TSP-FCOS, TSP-RCNN adopts the ground-truth label assignment of Faster RCNN for faster set prediction training.

Specifically, a proposal can be assigned to a ground-truth object if and only if the intersection-over-union (IoU) score between their bounding boxes is greater than 0.5.
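The IoU condition can be sketched as a NumPy mask over (proposal, ground truth) pairs, which would then restrict the cost matrix in the same way as the restricted matching of TSP-FCOS. `pairwise_iou` and `rcnn_valid_mask` are hypothetical helpers; note the strict inequality, so a proposal with IoU exactly 0.5 is not assignable.

```python
import numpy as np


def pairwise_iou(a, b):
    """IoU between every box in a (N, 4) and b (M, 4); boxes are (x1, y1, x2, y2)."""
    lt = np.maximum(a[:, None, :2], b[None, :, :2])  # top-left of intersections
    rb = np.minimum(a[:, None, 2:], b[None, :, 2:])  # bottom-right of intersections
    wh = np.clip(rb - lt, 0, None)                   # zero if boxes do not overlap
    inter = wh[..., 0] * wh[..., 1]
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    return inter / (area_a[:, None] + area_b[None, :] - inter)


def rcnn_valid_mask(proposals, gt_boxes, thresh=0.5):
    """valid[p, g] is True iff IoU(proposal p, ground truth g) > thresh."""
    return pairwise_iou(proposals, gt_boxes) > thresh


gt = np.array([[0.0, 0.0, 10.0, 10.0]])
props = np.array([[0.0, 0.0, 10.0, 10.0],
                  [0.0, 0.0, 10.0, 5.0],
                  [20.0, 20.0, 30.0, 30.0]])
print(pairwise_iou(props, gt).ravel())
print(rcnn_valid_mask(props, gt).ravel())  # [ True False False]
```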

5. Experiments

Ablation study

- Compatibility with deformable convolutions

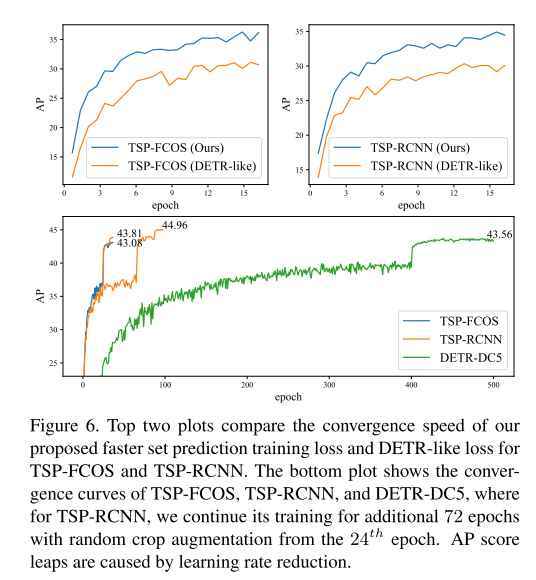

- Analysis of convergence

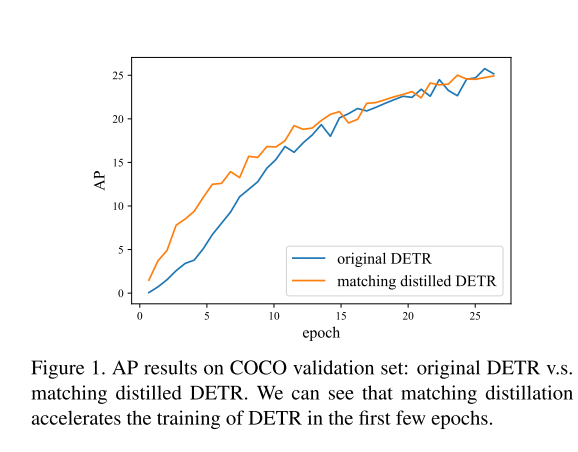

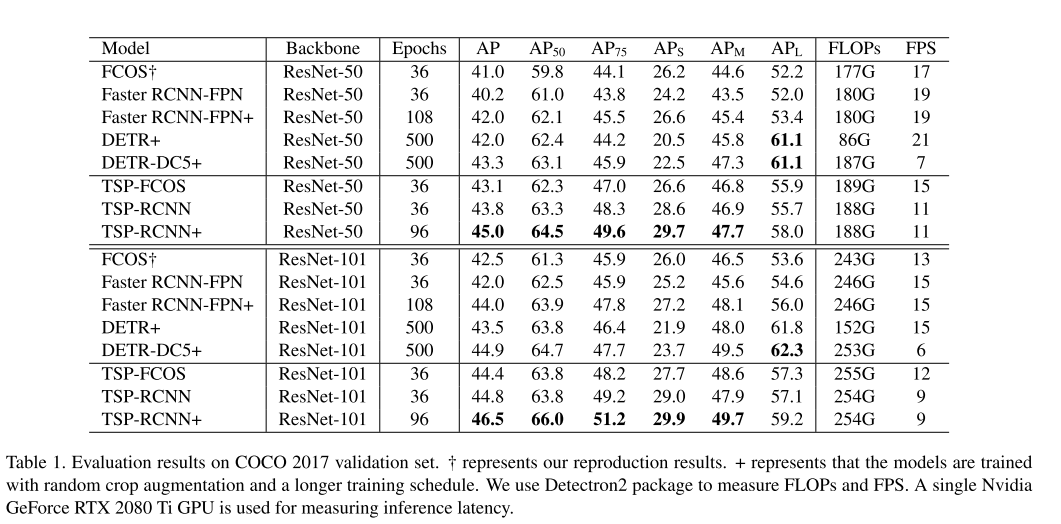

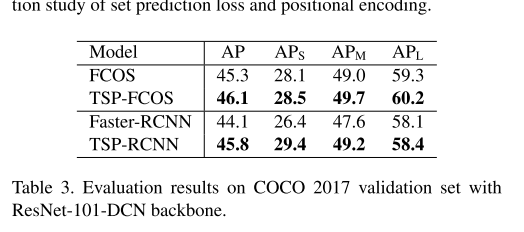

- Comparison with State-of-the-Arts