Main points:

- How the scripts call one another

- What each script contains

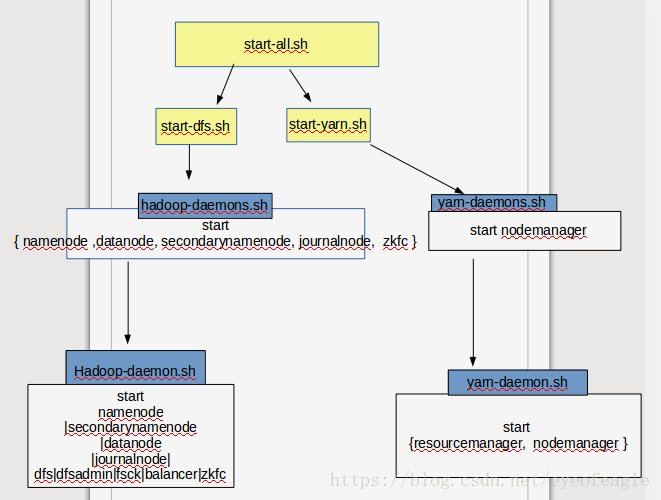

The figure above shows the call relationships between the Hadoop startup scripts.

Script contents (simplified):

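1. start-all.sh

```bash
# start hdfs daemons if hdfs is present
if [ -f "${HADOOP_HDFS_HOME}"/sbin/start-dfs.sh ]; then
  "${HADOOP_HDFS_HOME}"/sbin/start-dfs.sh --config "$HADOOP_CONF_DIR"
fi

# start yarn daemons if yarn is present
if [ -f "${HADOOP_YARN_HOME}"/sbin/start-yarn.sh ]; then
  "${HADOOP_YARN_HOME}"/sbin/start-yarn.sh --config "$HADOOP_CONF_DIR"
fi
```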

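The scripts in this chain accept a leading `--config DIR` option. In the Hadoop 2.x scripts this option is consumed by the shared `libexec/hadoop-config.sh`, which each script sources before doing any work (not shown in the simplified listings). A minimal sketch of that option-peeling pattern, assuming a hypothetical function `parse_config_opt` and a hypothetical `REST` array for the leftover arguments; the real helper also handles `--hosts` and `--hostnames`:

```bash
#!/usr/bin/env bash
# Hypothetical sketch (not Hadoop's code): strip a leading "--config DIR",
# export it as HADOOP_CONF_DIR, and keep the remaining arguments in REST.
parse_config_opt() {
  REST=("$@")
  if [ $# -gt 1 ] && [ "$1" = "--config" ]; then
    if [ ! -d "$2" ]; then
      echo "Error: cannot find configuration directory: $2" >&2
      return 1
    fi
    export HADOOP_CONF_DIR=$2
    REST=("${@:3}")   # everything after "--config DIR"
  fi
}

# Example: the kind of argument list start-all.sh hands down the chain
parse_config_opt --config /tmp start namenode
echo "conf=$HADOOP_CONF_DIR rest=${REST[*]}"
```

Because a function cannot rewrite its caller's `"$@"`, the sketch returns the leftovers in an array; the real scripts avoid this by being sourced, so their `shift` acts on the caller's arguments directly.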
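2. start-dfs.sh (calls hadoop-daemons.sh)

```bash
# NAMENODES, SECONDARY_NAMENODES, SHARED_EDITS_DIR and JOURNAL_NODES are
# read from the configuration earlier in the full script (via `hdfs getconf`).

# namenodes: only on the hosts listed in NAMENODES
"$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \
  --hostnames "$NAMENODES" \
  --script "$bin/hdfs" start namenode

#---------------------------------------------------------
# datanodes (using default slaves file)
"$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \
  --script "$bin/hdfs" start datanode

#---------------------------------------------------------
# secondary namenodes (if any)
if [ -n "$SECONDARY_NAMENODES" ]; then
  "$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \
    --hostnames "$SECONDARY_NAMENODES" \
    --script "$bin/hdfs" start secondarynamenode
fi

#---------------------------------------------------------
# quorumjournal nodes (if any)
case "$SHARED_EDITS_DIR" in
  qjournal://*)
    "$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \
      --hostnames "$JOURNAL_NODES" \
      --script "$bin/hdfs" start journalnode ;;
esac

#---------------------------------------------------------
# ZK Failover controllers, if auto-HA is enabled
AUTOHA_ENABLED=$("$HADOOP_PREFIX/bin/hdfs" getconf -confKey dfs.ha.automatic-failover.enabled)
if [ "$(echo "$AUTOHA_ENABLED" | tr A-Z a-z)" = "true" ]; then
  "$HADOOP_PREFIX/sbin/hadoop-daemons.sh" \
    --hostnames "$NAMENODES" \
    --script "$bin/hdfs" start zkfc
fi
```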
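The `case` on `SHARED_EDITS_DIR` starts JournalNodes only when the shared edits directory is a Quorum Journal URI of the form `qjournal://host1:port1;host2:port2;host3:port3/journalId`. The sketch below shows one way to turn such a URI into the space-separated host list that `--hostnames` expects. The function name `journal_hosts` is hypothetical, and the full start-dfs.sh derives `JOURNAL_NODES` with its own `sed` expression, which may differ in detail:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the JournalNode host names contained in a
# qjournal:// URI, space-separated; print nothing for any other scheme.
journal_hosts() {
  local uri=$1
  case "$uri" in
    qjournal://*)
      local authority=${uri#qjournal://}   # host1:8485;host2:8485/journalId
      authority=${authority%%/*}           # host1:8485;host2:8485
      local -a entries=() hosts=()
      local entry
      IFS=';' read -r -a entries <<< "$authority"
      for entry in "${entries[@]}"; do
        hosts+=("${entry%%:*}")            # drop the :port suffix
      done
      echo "${hosts[*]}"
      ;;
  esac
}

journal_hosts 'qjournal://jn1:8485;jn2:8485;jn3:8485/mycluster'
```

For the example URI this prints `jn1 jn2 jn3`; for a plain shared directory such as an NFS path it prints nothing, which matches the "(if any)" behaviour of the `case` above.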

2.1 hadoop-daemons.sh

```bash
exec "$bin/slaves.sh" "$bin/hadoop-daemon.sh" "$@"
```

2.1.1 slaves.sh
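slaves.sh is where the fan-out happens: it gets the host names (from `--hostnames` or the slaves file), then runs the given command on each host over ssh in the background, prefixing every output line with the host name. A dry-run sketch of the same loop, assuming a hypothetical `fan_out` function in which `echo` stands in for `ssh` so it runs without a cluster:

```bash
#!/usr/bin/env bash
# Hypothetical dry-run version of the slaves.sh loop; echo replaces ssh.
# Usage: fan_out SLAVE_FILE command args...
fan_out() {
  local slave_file=$1; shift
  local slave_names slave
  # same filter as slaves.sh: strip "#..." comments, then delete empty lines
  slave_names=$(sed 's/#.*$//;/^$/d' "$slave_file")
  for slave in $slave_names; do
    # slaves.sh runs: ssh $slave <command> 2>&1 | sed "s/^/$slave: /" &
    echo "$*" 2>&1 | sed "s/^/$slave: /" &
  done
  wait   # wait for every background job before returning
}

hosts=$(mktemp)
printf '# workers\nnode1\n\nnode2  # rack 2\n' > "$hosts"
fan_out "$hosts" hadoop-daemon.sh start datanode
rm -f "$hosts"
```

Because each host runs in the background, output lines may arrive in any order. The real loop also escapes spaces inside each argument (`${@// /\\ }`), because ssh joins its arguments into a single command line for the remote shell.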

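```bash
# --hostnames (handled in hadoop-config.sh) sets HADOOP_SLAVE_NAMES;
# otherwise read the hosts from the slaves file, dropping comments and blank lines
if [ "$HADOOP_SLAVE_NAMES" != '' ]; then
  SLAVE_NAMES=$HADOOP_SLAVE_NAMES
else
  SLAVE_FILE=${HADOOP_SLAVES:-${HADOOP_CONF_DIR}/slaves}
  SLAVE_NAMES=$(cat "$SLAVE_FILE" | sed 's/#.*$//;/^$/d')
fi

# start the daemons: one background ssh per host, output prefixed with the host name
# (spaces inside arguments are escaped because ssh joins its arguments into one command line)
for slave in $SLAVE_NAMES ; do
  ssh $slave $"${@// /\\ }" 2>&1 | sed "s/^/$slave: /" &
done

# wait for all background ssh sessions to finish
wait
```

2.1.2 hadoop-daemon.sh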
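hadoop-daemon.sh tracks each daemon through a pid file: `start` puts the process in the background and writes `$!` to the file; `stop` reads it back, checks liveness with `kill -0`, sends SIGTERM, and escalates to `kill -9` only if the process is still alive after a grace period (in Hadoop 2.x, `HADOOP_STOP_TIMEOUT` seconds, 5 by default). A self-contained sketch of that lifecycle, with `sleep` as a stand-in daemon; `daemon_start`, `daemon_stop` and the grace-period argument are hypothetical names chosen for illustration:

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the pid-file lifecycle used by hadoop-daemon.sh.
# A long "sleep" plays the role of the Java daemon.
daemon_start() {            # daemon_start PIDFILE LOGFILE command args...
  local pid_file=$1 log_file=$2; shift 2
  nohup "$@" > "$log_file" 2>&1 < /dev/null &
  echo $! > "$pid_file"     # $! = PID of the job just put in the background
}

daemon_stop() {             # daemon_stop PIDFILE [GRACE_SECONDS]
  local pid_file=$1 grace=${2:-5} target
  [ -f "$pid_file" ] || { echo "no daemon to stop"; return 0; }
  target=$(cat "$pid_file")
  if kill -0 "$target" 2>/dev/null; then       # still running?
    kill "$target"                             # SIGTERM: ask it to exit
    sleep "$grace"                             # give it time to shut down
    if kill -0 "$target" 2>/dev/null; then     # still alive: force it
      kill -9 "$target"
    fi
  fi
  rm -f "$pid_file"
}
```

For example, `daemon_start /tmp/demo.pid /tmp/demo.out sleep 300` followed by `daemon_stop /tmp/demo.pid 1` starts and then stops the stand-in process. Without the `sleep` between the two signals, `kill -9` would almost always fire before the daemon had a chance to shut down cleanly.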

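```bash
# (leading --config / --script options have already been consumed at this point)
startStop=$1
shift
command=$1
shift

# per-daemon .out log and pid file
log=$HADOOP_LOG_DIR/hadoop-$HADOOP_IDENT_STRING-$command-$HOSTNAME.out
pid=$HADOOP_PID_DIR/hadoop-$HADOOP_IDENT_STRING-$command.pid

case $startStop in
  (start)
    case $command in
      namenode|secondarynamenode|datanode|journalnode|dfs|dfsadmin|fsck|balancer|zkfc)
        # run the daemon in the background, detached from the terminal
        nohup nice -n "${HADOOP_NICENESS:-0}" "$HADOOP_HDFS_HOME"/bin/hdfs $command "$@" > "$log" 2>&1 < /dev/null &
        ;;
    esac
    # $! is the PID of the background job just started
    echo $! > "$pid"
    ;;

  (stop)
    TARGET_PID=$(cat "$pid")
    if kill -0 $TARGET_PID > /dev/null 2>&1; then
      # SIGTERM first, wait, then SIGKILL if the process is still alive
      kill $TARGET_PID
      sleep "${HADOOP_STOP_TIMEOUT:-5}"
      if kill -0 $TARGET_PID > /dev/null 2>&1; then
        kill -9 $TARGET_PID
      fi
    fi
    rm -f "$pid"
    ;;
esac
```

2.1.2.1 bin/hdfs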
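bin/hdfs is essentially a lookup table from subcommand to Java main class (plus per-daemon JVM options), followed by an `exec` of the JVM. The lookup can be sketched as a `case` statement; the function `hdfs_class` is hypothetical and covers only the daemons started by start-dfs.sh:

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the bin/hdfs dispatch: map a daemon name to the Java
# class bin/hdfs would run, or fail for names not handled here.
hdfs_class() {
  case "$1" in
    namenode)          echo 'org.apache.hadoop.hdfs.server.namenode.NameNode' ;;
    secondarynamenode) echo 'org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode' ;;
    datanode)          echo 'org.apache.hadoop.hdfs.server.datanode.DataNode' ;;
    journalnode)       echo 'org.apache.hadoop.hdfs.qjournal.server.JournalNode' ;;
    zkfc)              echo 'org.apache.hadoop.hdfs.tools.DFSZKFailoverController' ;;
    *)                 echo "unknown command: $1" >&2; return 1 ;;
  esac
}

# Print (instead of exec) the java command line for one daemon
cmd=namenode
echo java "-Dproc_$cmd" "$(hdfs_class "$cmd")"
```

This prints `java -Dproc_namenode org.apache.hadoop.hdfs.server.namenode.NameNode`. The `-Dproc_<command>` system property is not read by the daemon itself; it only makes the process easy to spot, e.g. with `ps -ef | grep proc_namenode`.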

```bash
# first argument is the subcommand (namenode, datanode, ...); the rest go to the Java class
COMMAND=$1
shift

if [ "$COMMAND" = "namenode" ] ; then
  CLASS='org.apache.hadoop.hdfs.server.namenode.NameNode'
  HADOOP_OPTS="$HADOOP_OPTS $HADOOP_NAMENODE_OPTS"
elif [ "$COMMAND" = "datanode" ] ; then
  CLASS='org.apache.hadoop.hdfs.server.datanode.DataNode'
  if [ "$starting_secure_dn" = "true" ]; then
    # a secure DataNode is launched through jsvc, which selects the JVM with -jvm
    HADOOP_OPTS="$HADOOP_OPTS -jvm server $HADOOP_DATANODE_OPTS"
  else
    HADOOP_OPTS="$HADOOP_OPTS -server $HADOOP_DATANODE_OPTS"
  fi
# ....
fi

exec "$JAVA" -Dproc_$COMMAND $HADOOP_OPTS $CLASS "$@"
```

3. start-yarn.sh
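start-yarn.sh follows the same idea as start-dfs.sh but is simpler: the ResourceManager is started on the local machine through the singular yarn-daemon.sh, while NodeManagers are started on every host in the slaves file through the plural yarn-daemons.sh. A dry-run sketch that prints this plan; `yarn_start_plan` is a hypothetical name and the host names are made up:

```bash
#!/usr/bin/env bash
# Hypothetical dry run of start-yarn.sh: print which daemon would start where.
# Usage: yarn_start_plan SLAVE_FILE
yarn_start_plan() {
  local slave_file=$1 host
  # yarn-daemon.sh (singular): runs on the machine executing start-yarn.sh
  echo "localhost: resourcemanager"
  # yarn-daemons.sh (plural): fans out to every host in the slaves file
  for host in $(sed 's/#.*$//;/^$/d' "$slave_file"); do
    echo "$host: nodemanager"
  done
}

hosts=$(mktemp)
printf 'node1\nnode2\n' > "$hosts"
yarn_start_plan "$hosts"
rm -f "$hosts"
```

This is why start-yarn.sh is normally run on the machine that is meant to host the ResourceManager.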

```bash
# start resourceManager (on this host only)
"$bin"/yarn-daemon.sh start resourcemanager

# start nodeManager (on every host in the slaves file)
"$bin"/yarn-daemons.sh start nodemanager
```

3.1 yarn-daemons.sh

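The `\;` in yarn-daemons.sh is meant to reach slaves.sh as a separate argument; in the full Hadoop 2.x script the line reads `exec "$bin/slaves.sh" --config $YARN_CONF_DIR cd "$HADOOP_YARN_HOME" \; "$bin/yarn-daemon.sh" --config $YARN_CONF_DIR "$@"`. The backslash stops the local shell from treating `;` as a command separator, so it arrives as an ordinary word. ssh then joins all its arguments into one command line, and the remote shell does treat `;` as a separator, running `cd` first and the daemon script second. The sketch reproduces this with `bash -c` standing in for the remote shell (an assumption so it runs without ssh); `show_args` and `run_remote` are hypothetical names:

```bash
#!/usr/bin/env bash
# Print each argument on its own line, in brackets, to show how words arrive.
show_args() {
  printf '[%s]\n' "$@"
}

# Stand-in for "ssh host args...": ssh joins its arguments with spaces and
# hands the resulting string to the remote shell; bash -c plays that shell here.
run_remote() {
  bash -c "$*"
}

show_args cd /tmp \; pwd      # the escaped ; is just another argument: [;]
run_remote cd /tmp \; pwd     # the "remote" shell splits on ; and runs pwd in /tmp
```

Writing `"$bin/slaves.sh"\;` with no space, as in the simplified listing, glues the `;` onto the script path and produces a file name that does not exist.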
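```bash
# on every slave run: cd "$HADOOP_YARN_HOME" ; yarn-daemon.sh <args>
exec "$bin/slaves.sh" cd "$HADOOP_YARN_HOME" \; "$bin/yarn-daemon.sh" "$@"
```

3.1.1 yarn-daemon.sh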
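One step the simplified `start` branch leaves out: the full Hadoop 2.x daemon scripts first check whether the pid file points at a live process and, if so, refuse to start a second copy with a "running as process ... Stop it first." message. A sketch of that guard, assuming a hypothetical `already_running` function:

```bash
#!/usr/bin/env bash
# Hypothetical guard: succeed (exit status 0) if PIDFILE exists and the PID
# it contains belongs to a live process, so a second start can be refused.
already_running() {
  local pid_file=$1
  [ -f "$pid_file" ] && kill -0 "$(cat "$pid_file")" 2>/dev/null
}

pid_file=$(mktemp)
echo $$ > "$pid_file"              # the current shell is certainly alive
if already_running "$pid_file"; then
  echo "running as process $(cat "$pid_file"). Stop it first."
fi
rm -f "$pid_file"
```

`kill -0` sends no signal; it only reports whether the PID exists and may be signalled, so a stale pid file left behind after a crash does not block the next start.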

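```bash
startStop=$1
shift
command=$1
shift

# $log and $pid are the per-daemon .out log and pid file, set earlier in the full script
case $startStop in
  (start)
    # optionally rsync the installation from $YARN_MASTER before starting
    if [ "$YARN_MASTER" != "" ]; then
      rsync -a -e ssh --delete --exclude=.svn $YARN_MASTER/ "$HADOOP_YARN_HOME"
    fi
    cd "$HADOOP_YARN_HOME"
    # detach from the ssh session, set the nice level, log stdout/stderr, no stdin
    nohup nice -n $YARN_NICENESS "$HADOOP_YARN_HOME"/bin/yarn $command "$@" > "$log" 2>&1 < /dev/null &
    echo $! > $pid
    # capture the ulimit output
    ulimit -a >> $log 2>&1
    ;;
  # .....
```

3.1.1.1 bin/yarn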
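bin/yarn assembles three things before its `exec`: a classpath (build-tree `target/classes` directories are appended only if they exist, which matters when running from a source checkout), the main class for the subcommand, and JVM options such as `-Xmx` built from `YARN_NODEMANAGER_HEAPSIZE` (in MB). A dry-run sketch that builds and prints the resulting java command line for the NodeManager; `build_yarn_cmd` and its parameters are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical dry run of bin/yarn for the nodemanager subcommand:
# build the classpath and heap option, then print the java command line.
# Usage: build_yarn_cmd YARN_HOME HEAP_MB
build_yarn_cmd() {
  local home=$1 heap_mb=$2 dir
  local classpath=${CLASSPATH:-}
  # append build-output directories only when they exist (source checkout)
  for dir in yarn-common/target/classes yarn-server/yarn-server-nodemanager/target/classes; do
    if [ -d "$home/$dir" ]; then
      classpath=${classpath}:$home/$dir
    fi
  done
  local heap_opt=""
  if [ -n "$heap_mb" ]; then
    heap_opt="-Xmx${heap_mb}m"        # e.g. 2048 -> -Xmx2048m
  fi
  echo java -Dproc_nodemanager $heap_opt -classpath "$classpath" \
    org.apache.hadoop.yarn.server.nodemanager.NodeManager
}

build_yarn_cmd /opt/hadoop-yarn 2048
```

The `if [ -d ... ]` checks let the same script work both from an installed distribution (where the `target/classes` directories are absent) and from a freshly built source tree.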

```bash
COMMAND=$1
shift

if [ -d "$HADOOP_YARN_HOME/yarn-common/target/classes" ]; then
  CLASSPATH=${CLASSPATH}:$HADOOP_YARN_HOME/yarn-common/target/classes
fi
if [ -d "$HADOOP_YARN_HOME/yarn-mapreduce/target/classes" ]; then
  CLASSPATH=${CLASSPATH}:$HADOOP_YARN_HOME/yarn-mapreduce/target/classes
fi
if [ -d "$HADOOP_YARN_HOME/yarn-server/yarn-server-nodemanager/target/classes" ]; then
  CLASSPATH=${CLASSPATH}:$HADOOP_YARN_HOME/yarn-server/yarn-server-nodemanager/target/classes
fi

# .....

elif [ "$COMMAND" = "resourcemanager" ] ; then
  CLASSPATH=${CLASSPATH}:$YARN_CONF_DIR/rm-config/log4j.properties
  CLASS='org.apache.hadoop.yarn.server.resourcemanager.ResourceManager'
  YARN_OPTS="$YARN_OPTS $YARN_RESOURCEMANAGER_OPTS"
elif [ "$COMMAND" = "nodemanager" ] ; then
  CLASSPATH=${CLASSPATH}:$YARN_CONF_DIR/nm-config/log4j.properties
  CLASS='org.apache.hadoop.yarn.server.nodemanager.NodeManager'
  YARN_OPTS="$YARN_OPTS -server $YARN_NODEMANAGER_OPTS"
  if [ "$YARN_NODEMANAGER_HEAPSIZE" != "" ]; then
    JAVA_HEAP_MAX="-Xmx""$YARN_NODEMANAGER_HEAPSIZE""m"
  fi

# ....

exec "$JAVA" -Dproc_$COMMAND $JAVA_HEAP_MAX $YARN_OPTS -classpath "$CLASSPATH" $CLASS "$@"
```