1、案例内容简介

易车网奥迪汽车信息的爬取

内容步骤:

爬取,解析(动态requests+ajax/selenium),清洗,持久化(mysql),可视化(seaborn)

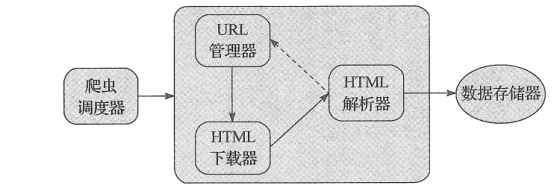

2、案例分析与设计

(1) 系统框架

整个框架分为六个模块:爬虫调度器、URL管理器、HTML下载器、HTML解析器、数据存储器、数据可视化

(2) 数据库设计

用于记录奥迪汽车信息

表ad_data

| id |

Int |

自增主键 |

| name |

Varchar(255) |

汽车名称 |

| titleImgURl |

Varchar(255) |

镖旗图片 |

| referencePrice |

Varchar(255) |

参考价 |

| guidePrice |

Varchar(255) |

厂商价 |

| Displacement |

Varchar(255) |

排量 |

| OilConsumption |

Varchar(255) |

油耗 |

3、案例代码实现

(1) 爬虫调度器模块

主要负责其他模块的协调工作

文件相对地址(文件名):奥迪/SpiderMan.py

#coding:utf-8 from DataOutput import DataOutput from UrlManager import UrlManager from HtmlParser import HtmlParser from SeabornShow import SeabornShow from HtmlDownloader import HtmlDownloader import seaborn as sns class SpiderMan(object): def __init__(self): self.manager = UrlManager() self.downloader = HtmlDownloader() self.parser = HtmlParser() self.output = DataOutput() self.show = SeabornShow() def crawl(self,root_url): self.manager.add_new_url(root_url) while (self.manager.has_new_url() and self.manager.old_url_size() < 100): try: new_url = self.manager.get_new_url() print("》》开始下载页面内容") html = self.downloader.download(new_url) print("》》开始接解析下载的页面") new_urls,data = self.parser.parser(new_url,html) self.output.store_data(data) except: print("crawl failed") # self.output.output_html() print("》》对解析的数据进行mysql数据库持久化操作") self.output.output_mysql() # 数据帧格式数据 df = self.output.mysql_to_pandas() print("》》散点图展示奥迪油耗跟价格的关系") self.show.show(df) if __name__ == "__main__": spider_man = SpiderMan() aodi = "http://car.bitauto.com/tree_chexing/mb_9/" # 奥迪列表页地址: spider_man.crawl(aodi)

(2) Url管理模块

维护爬取的url,跟未爬取的url地址

文件相对地址(文件名):奥迪/HtmlDownloader.py

#coding:utf-8 ''' url管理器 ''' class UrlManager(object): def __init__(self): self.new_urls = set() self.old_urls = set() def has_new_url(self): ''' 判断是否有url未被爬取 :return: ''' return self.new_url_size() != 0 def get_new_url(self): ''' 获取url :return: ''' if self.has_new_url(): new_url = self.new_urls.pop() self.old_urls.add(new_url) return new_url else: return None def add_new_url(self,url): ''' 增加url :param url: :return: ''' if url is None: return ''' 增加时去重跟判断以处理的url避免重复处理出现死循环 ''' if url not in self.new_urls and url not in self.old_urls: self.new_urls.add(url) def add_new_urls(self,urls): ''' 增加一组url :param urls: :return: ''' if urls is None or len(urls)==0: return for url in urls: self.add_new_url(url) def new_url_size(self): return len(self.new_urls) def old_url_size(self): return len(self.old_urls)

(3) HTML下载模块

负责下载url管理器中提供的未爬url链接并在html

文件相对地址(文件名):奥迪/HtmlDownloader.py

#coding:utf-8 import requests import chardet ''' html下载器 ''' class HtmlDownloader(object): def download(self,url): try: if url is None: return sessions = requests.session() sessions.headers[ 'User-Agent'] = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/34.0.1847.131 Safari/537.36' r = sessions.get(url) if (r.status_code == 200): r.encoding = chardet.detect(r.content)["encoding"] return r.text return None except: print("downloader failed") if __name__ == "__main__": htmlDownloader = HtmlDownloader() url = "http://baike.baidu.com/view/284853.html" # https://baike.baidu.com/item/%E7%BD%91%E7%BB%9C%E7%88%AC%E8%99%AB/5162711?fr=aladdin url = "https://baike.baidu.com/item/%E7%BD%91%E7%BB%9C%E7%88%AC%E8%99%AB/5162711?fr=aladdin" # 奥迪 aodi = "http://car.bitauto.com/tree_chexing/mb_9/" print(htmlDownloader.download(aodi))

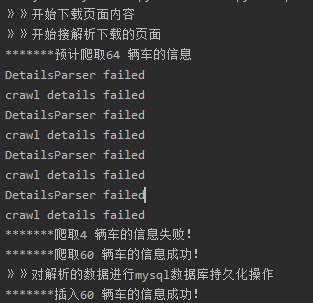

(4) HTML解析模块

解析下载器的html页面,并解析出有效数据,也可以解析跟进的url链接

内嵌一个小的详情页爬虫,包括DetailsParser.py,DetailsDownloader.py

文件相对地址(文件名):奥迪/HtmlParser.py

#coding:utf-8 import re import urlparser import urllib import urllib3 from bs4 import BeautifulSoup from DetailsDownloader import DetailsDownloader from DetailsParser import DetailsParser ''' html解释器 ''' class HtmlParser(object): def __init__(self): self.detailsDownloader = DetailsDownloader() self.detailsParser = DetailsParser() def parser(self,page_url,html_cont): ''' 用于解析网页内容,抽取URL和数据 :param page_url:下载页面的url :param html_cont:下载的网页内容 :return: ''' try: if page_url is None and html_cont is None: return soup = BeautifulSoup(html_cont, "html.parser") new_urls = self._get_new_urls(page_url, soup) new_datas = self._get_new_data(page_url, soup) return new_urls, new_datas except: print("paser failed") ''' 获取跟进地址方法 ''' def _get_new_urls(self,page_url,soup): ''' 抽取新的URL集合 :param page_url: :param soup: :return: ''' new_urls = set() # 抽取符合要求的a标签 links = soup.find_all('a', href=re.compile(r'/item/.*')) # print(links) for link in links: new_url = link["href"] #拼接成完整网址 # python 3 new_full_url = urllib.parse.urljoin(page_url, new_url) new_urls.add(new_full_url) return new_urls ''' 获取奥迪汽车信息 ''' def _get_new_data(self,page_url,soup): dataList = soup.find_all(class_='img-info-layout-vertical img-info-layout-vertical-center img-info-layout-vertical-180120') print("*******预计爬取%s 辆车的信息" % len(dataList)) items = [] i = 0 for dataTag in dataList: try: name = dataTag.find('div', class_='img').find_next_sibling(class_='p-list').find('a').string titleImgUrl = dataTag.find('div', class_='img').find('img')['src'] referencePrice = dataTag.find('div', class_='img').find_next_sibling(class_='p-list').find('li',class_='price').find('a').string #详情页信息 detailsUrl = dataTag.find('div', class_='img').find('a')['href'] detailsUrl = "http://car.bitauto.com" + detailsUrl detailsHtml = self.detailsDownloader.download(detailsUrl) detailsData = self.detailsParser.parser(detailsUrl, detailsHtml) detailsData['name'] = name detailsData['titleImgUrl'] = titleImgUrl detailsData['referencePrice'] = referencePrice items.append(detailsData) except: i=i+1 print("crawl details failed") print("*******爬取%s 辆车的信息失败!" % i) print("*******爬取%s 辆车的信息成功!" % len(items)) return items if __name__ == "__main__": pass

文件相对地址(文件名):奥迪/DetailsDownloader.py

#coding:utf-8 import requests import chardet class DetailsDownloader(object): def download(self, url): try: if url is None: return sessions = requests.session() sessions.headers[ 'User-Agent'] = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/34.0.1847.131 Safari/537.36' r = sessions.get(url) if (r.status_code == 200): r.encoding = chardet.detect(r.content)["encoding"] return r.text return None except: print("DetailsDownloader failed") if __name__ == "__main__": pass

文件相对地址(文件名):奥迪/DetailsParser.py

#coding:utf-8 import re import urlparser import urllib import urllib3 from bs4 import BeautifulSoup ''' 奥迪详情html解释器 ''' class DetailsParser(object): def parser(self,page_url,html_cont): try: if page_url is None and html_cont is None: return soup = BeautifulSoup(html_cont, "html.parser") new_datas = self._get_new_data(page_url, soup) return new_datas except: print("DetailsParser failed") ''' 获取奥迪详细信息 ''' def _get_new_data(self, page_url, soup): contTag = soup.find('h5',id='factory-price').find_parent().find_parent() data = {} # 指导价格 data['guidePrice'] = soup.find('h5',id='factory-price').find('span',class_='price').string # 排量 data['displacement'] = contTag.find_next_sibling().select('li:nth-of-type(1)')[0].find('span',class_='data').string # 油耗 data['oilConsumption'] = contTag.find_next_sibling().select('li:nth-of-type(2)')[0].find('a',class_='data').string return data if __name__ == "__main__": pass

(5) 数据存储器

将解析器解析处理的数据持久化化到mysql数据库

(包括一个数据持久化模块MasqlHelper.py,跟一个实体类AODIItems.py)

文件相对地址(文件名):奥迪/DataOutput.py

#coding:utf-8 import codecs from MysqlHelper import MysqlHelper class DataOutput(object): def __init__(self): self.datas=[] self.host = "localhost" self.user = "root" self.password = "" self.database = "ai_info" self.charset = "utf-8" self.mh = MysqlHelper(self.host,self.user,self.password,self.database,self.charset) def store_data(self,data): if data is None: return self.datas = data def output_html(self): # codecs 可以方便的设计编码格式 fout=codecs.open('baike.html','w',encoding='utf-8') fout.write("<html>") fout.write("<head><meta charset='utf-8'/></head>") fout.write("<body>") fout.write("<table border='2'>") for data in self.datas: fout.write("<tr>") fout.write("<td>%s</td>"%data['url']) fout.write("<td>%s</td>"%data['title']) fout.write("<td>%s</td>"%data['summary']) fout.write("</tr>") fout.write("</table>") fout.write("</body>") fout.write("</html>") fout.close() def output_mysql(self): TABLE_NAME = "ad_data" sql = "insert into " + TABLE_NAME + " (name, titleImgUrl, referencePrice, guidePrice, displacement, oilConsumption) values(%s,%s,%s,%s,%s,%s)" rows = 0 for data in self.datas: name = data['name'] titleImgUrl = data['titleImgUrl'] referencePrice = data['referencePrice'] guidePrice = data['guidePrice'] displacement = data['displacement'] oilConsumption = data['oilConsumption'] params = (name, titleImgUrl, referencePrice, guidePrice, displacement, oilConsumption) row = self.mh.aud(sql,params) rows = rows + row print("*******插入%s 辆车的信息成功!" % rows) def find_output(self): TABLE_NAME = "ad_data" sql = "select * from " + TABLE_NAME print(self.mh.find(sql, None)) ''' 取轿车信息并转化为pandas 的数据帧类型存储 ''' def mysql_to_pandas(self): TABLE_NAME = "ad_data" sql = "select * from " + TABLE_NAME return self.mh.findPandas(sql)

文件相对地址(文件名):奥迪/MysqlHelper.py

import pymysql as ps import pandas as pd class MysqlHelper: def __init__(self, host, user, password, database, charset): self.host = host self.user = user self.password = password self.database = database self.charset = charset self.db = None self.curs = None # 数据库连接 def open(self): self.db = ps.connect(host=self.host, user=self.user, password=self.password,database=self.database) self.curs = self.db.cursor() # 数据库关闭 def close(self): self.curs.close() self.db.close() # 数据增删改 def aud(self, sql, params): self.open() try: row = self.curs.execute(sql, params) self.db.commit() self.close() return row except : print('cud出现错误') self.db.rollback() self.close() return 0 # 数据查询 def find(self, sql, params): self.open() try: df = pd.read_sql(sql=sql,con=self.db) print(df.head()) result = self.curs.execute(sql, params) self.close() return result except: print('find出现错误') # 解析为pandas def findPandas(self,sql): self.open() try: df = pd.read_sql(sql=sql,con=self.db) return df except: print('解析为pandas出现错误')

文件相对地址(文件名):奥迪/AODIItems.py

class AODIItems(object): def __init__(self,name,titleImgUrl,referencePrice,guidePrice,displacement,oilConsumption): self.name = name self.titleImgUrl = titleImgUrl self.referencePrice = referencePrice self.guidePrice = guidePrice self.displacement = displacement self.oilConsumption = oilConsumption

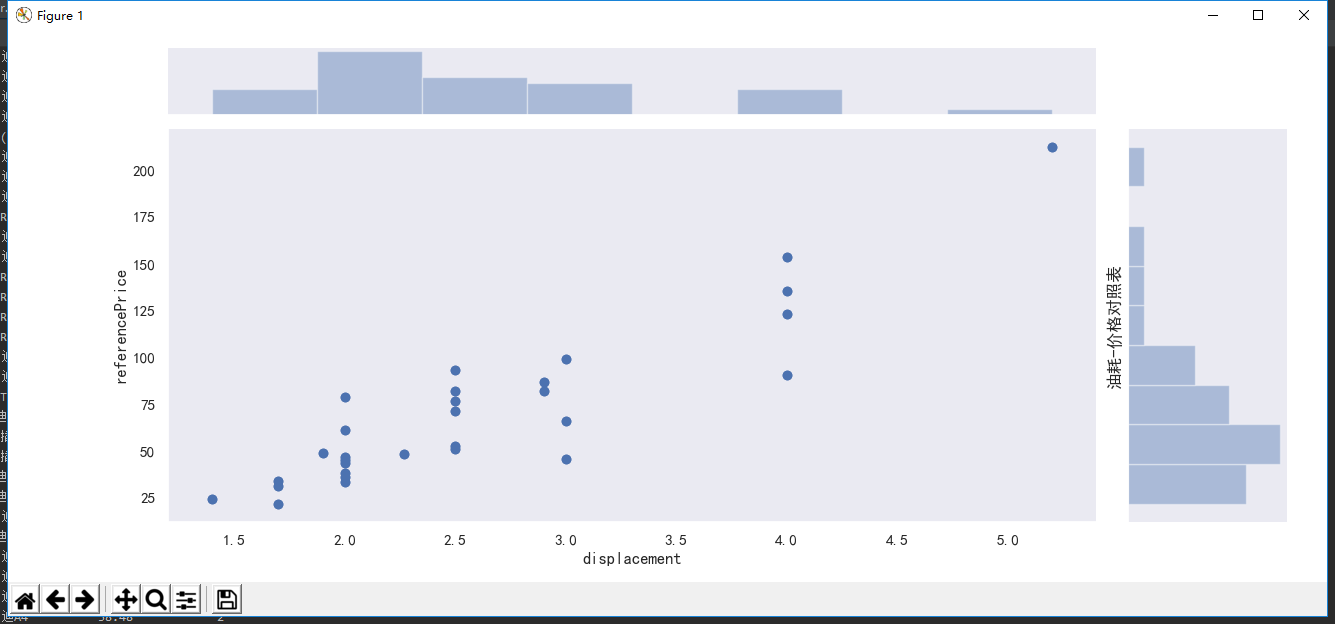

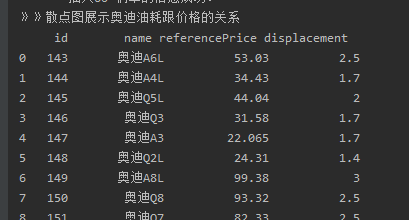

(6) 数据可视化模块

负责对数据库的数据进行可视化

文件相对地址(文件名):奥迪/SeabornShow.py

import seaborn as sns import pandas as pd import pymysql as ps import numpy as np import scipy.stats as sci import matplotlib.pyplot as plt import re # seaborn构建散点图 class SeabornShow(object): ''' 奥迪油耗参考价数据的展示函数 ''' def show(self,data): sns.set(style="dark") # 数据整理 for i in range(len(data)): # 油耗 取算数平均值 data.iloc[i, 5] = self.ave(self.analyticalFigures(data.iloc[i, 5])) # 参考价 取算数平均值 data.iloc[i, 3] = self.ave(self.analyticalFigures(data.iloc[i, 3])) print(data[['id','name','referencePrice','displacement']]) sns.jointplot(x='displacement',y='referencePrice',data=data) plt.rcParams['font.sans-serif'] = ['SimHei'] plt.ylabel("油耗-价格对照表") plt.show() def analyticalFigures(self,str): return re.compile(r'[\d.]+').findall(str) def ave(self,numList): result = 0 sum = 0.0 for num in numList: sum = sum + float(num) result = sum / len(numList) return result if __name__ == '__main__': seabornShow = SeabornShow() # seabornShow.show() rs = np.random.RandomState(2) df = pd.DataFrame(rs.randn(200, 2), columns=['A', 'B']) # print(df) sns.jointplot(x='A',y='B',data=df,kind='reg')

4、效果跟细节

(1)代码运行步骤:

(2)散点图展示奥迪油耗跟价格的关系

(3)数据库数据表

(4)数据显示