The remaining lessons all focus on applied implementations, so much of the code needs to be modularized.

The boilerplate recipe for building a modular neural network:

Forward propagation builds the network and defines its structure (forward.py).

Backward propagation trains the network and optimizes its parameters (backward.py).
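The division of labor between the two files can be sketched without TensorFlow. The toy below is only an illustration under stated assumptions: the model is a single linear unit, and the data and hyperparameters are invented for the example. What carries over is the pattern: `forward` only describes the model, while `backward` owns the loss, the gradients, and the update loop.

```python
def forward(x, w, b):
    """Forward pass: defines the model structure (here a single linear unit)."""
    return w * x + b


def backward(xs, ys, steps=2000, lr=0.05):
    """Training loop: calls forward() and updates w, b by gradient descent on MSE."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        preds = [forward(x, w, b) for x in xs]
        # Gradients of mean((pred - y)^2) with respect to w and b
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2 * x + 1 for x in xs]
w, b = backward(xs, ys)
```

Because the training code only reaches the model through `forward`, the network structure can be changed without touching the training loop.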

The code is split into three modules:

generate.py

    import numpy as np
    import matplotlib.pyplot as plt

    seed = 2

    def generate():
        # Create a random number generator from the fixed seed
        rdm = np.random.RandomState(seed)
        # Draw a 300x2 matrix: 300 coordinate pairs (x0, x1) used as the input dataset
        X = rdm.randn(300, 2)
        # For each row of X, assign label 1 if x0^2 + x1^2 < 2, otherwise 0;
        # these are the labels of the input dataset
        Y_ = [int(x0 * x0 + x1 * x1 < 2) for (x0, x1) in X]
        # Map each label to a color (1 -> 'red', 0 -> 'blue') so the two classes
        # are easy to tell apart in a plot
        Y_COLOR = [['red' if y else 'blue'] for y in Y_]
        # Reshape the data and labels. A first dimension of -1 is inferred from
        # the column count given as the second argument: X becomes n rows x 2
        # columns, Y_ becomes n rows x 1 column
        X = np.vstack(X).reshape(-1, 2)
        Y_ = np.vstack(Y_).reshape(-1, 1)
        # print(X)
        # print(Y_)
        # Plot the (x0, x1) point of every row, colored by the matching entry of Y_COLOR
        # plt.scatter(X[:, 0], X[:, 1], c=np.squeeze(Y_COLOR))
        # plt.show()
        return X, Y_, Y_COLOR

forward.py

import tensorflow as tf

def forward(x,regularizer):

w1=get_weight([2,11],0.01)

b1=get_bias([11])

y1=tf.nn.relu(tf.matmul(x,w1)+b1)

w2=get_weight([11,1],0.01)

b2=get_bias([1])

y=tf.matmul(y1,w2+b2)#输出层不过激活函数

return y

def get_weight(shape,regularizer):

w=tf.Variable(tf.random_normal(shape),dtype=tf.float32)

tf.add_to_collection('losses',tf.contrib.layers.l2_regularizer(regularizer)(w))

return w

def get_bias(shape):

b=tf.Variable(tf.constant(0.01,shape=shape))

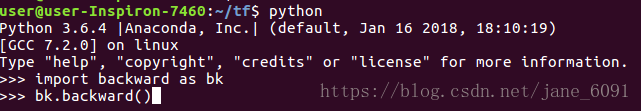

return bbackward.py

    import tensorflow as tf
    import numpy as np
    import matplotlib.pyplot as plt
    import generate as gr
    import forward as fw

    STEPS = 40000
    BATCH_SIZE = 30
    LEARNING_RATE_BASE = 0.001
    LEARNING_RATE_DECAY = 0.999
    REGULARIZER = 0.01

    def backward():
        x = tf.placeholder(tf.float32, shape=(None, 2))
        y_ = tf.placeholder(tf.float32, shape=(None, 1))
        X, Y_, Y_C = gr.generate()
        y = fw.forward(x, REGULARIZER)
        global_step = tf.Variable(0, trainable=False)

        # Define the loss: mean squared error plus the L2 penalties collected in forward.py
        loss_mse = tf.reduce_mean(tf.square(y - y_))
        loss_total = loss_mse + tf.add_n(tf.get_collection('losses'))
        # The loss can be the distance, or the squared distance, between y and y_.
        # It can also be cross-entropy:
        # ce = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))
        # cem = tf.reduce_mean(ce)
        # To regularize, add the collected penalties:
        # loss = (gap between y and y_) + tf.add_n(tf.get_collection('losses'))

        # Exponentially decaying learning rate
        learning_rate = tf.train.exponential_decay(
            LEARNING_RATE_BASE, global_step, BATCH_SIZE, LEARNING_RATE_DECAY, staircase=True)
        # Minimize loss_total so that the regularization term actually affects training
        train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(
            loss_total, global_step=global_step)

        # Moving average (optional; MOVING_AVERAGE_DECAY must be defined before enabling this)
        # ema = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
        # ema_op = ema.apply(tf.trainable_variables())
        # with tf.control_dependencies([train_step, ema_op]):
        #     train_op = tf.no_op(name='train')

        # Training
        with tf.Session() as sess:
            init_op = tf.global_variables_initializer()
            sess.run(init_op)
            for i in range(STEPS):
                # Cycle through all 300 samples in windows of BATCH_SIZE
                start = (i * BATCH_SIZE) % 300
                end = start + BATCH_SIZE
                sess.run(train_step, feed_dict={x: X[start:end], y_: Y_[start:end]})
                if i % 2000 == 0:
                    loss_v = sess.run(loss_total, feed_dict={x: X, y_: Y_})
                    print("After %d steps, loss is: %f" % (i, loss_v))

            # Evaluate the trained network on a grid covering [-3, 3) x [-3, 3);
            # this must happen while the session is still open
            xx, yy = np.mgrid[-3:3:.01, -3:3:.01]
            grid = np.c_[xx.ravel(), yy.ravel()]
            probs = sess.run(y, feed_dict={x: grid})
            probs = probs.reshape(xx.shape)

        # Plot the data points and the contour where the network output equals 0.5
        plt.scatter(X[:, 0], X[:, 1], c=np.squeeze(Y_C))
        plt.contour(xx, yy, probs, levels=[.5])
        plt.show()

    # Finally, run the training
    if __name__ == '__main__':
        backward()
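Two details of the training loop can be checked without TensorFlow: the staircase learning-rate schedule and the mini-batch windows. The plain-Python sketch below reuses the constants from backward.py; `decayed_lr`, `batch_window`, and `covered_indices` are hypothetical helper names introduced only for illustration. The schedule follows the documented formula of `tf.train.exponential_decay` with `staircase=True`, namely `base * decay_rate ** (global_step // decay_steps)`.

```python
BATCH_SIZE = 30
LEARNING_RATE_BASE = 0.001
LEARNING_RATE_DECAY = 0.999
N_SAMPLES = 300  # size of the dataset returned by generate()


def decayed_lr(global_step, decay_steps=BATCH_SIZE):
    """Staircase exponential decay: the rate drops once every decay_steps steps."""
    return LEARNING_RATE_BASE * LEARNING_RATE_DECAY ** (global_step // decay_steps)


def batch_window(i, modulus=N_SAMPLES):
    """Start and end indices of the slice fed at training step i."""
    start = (i * BATCH_SIZE) % modulus
    return start, start + BATCH_SIZE


def covered_indices(modulus, steps=1000):
    """Set of sample indices that are ever fed to the network during training."""
    seen = set()
    for i in range(steps):
        start, end = batch_window(i, modulus)
        # Slicing past the end of the data simply truncates, so clip at N_SAMPLES
        seen.update(range(start, min(end, N_SAMPLES)))
    return seen


# The learning rate is constant for the first 30 steps, then shrinks by 0.1%
lr_first, lr_after_one_decay = decayed_lr(0), decayed_lr(30)

# Number of distinct samples used with the modulus set to the dataset size
# versus a smaller modulus of 200
n_used_full = len(covered_indices(300))
n_used_small = len(covered_indices(200))
```

With the modulus equal to the dataset size, every one of the 300 samples is visited. With a modulus of 200, the windows never reach past index 219, so the last 80 samples would never contribute to training.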