这篇文章主要参考https://github.com/moka11moka/kmeans-python/tree/master/kmeans点击打开链接

https://www.cnblogs.com/pinard/p/6169370.html点击打开链接

第一种是简单的二维数据集生成及kmeans聚类算法原理实现,第二种是借助sklearn实现聚类数据集生成及kmeans算法实现,并探索k值个数选择

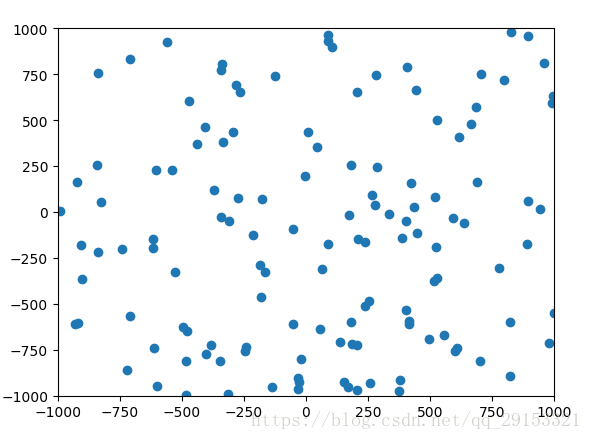

其中randPoint.py,能生成130个随机的x、y二维数据点

# -*- coding:utf-8 -*-

from numpy import *

import matplotlib.pyplot as plt

# 用于生成随机的样本点

def randPoint():

x = []

y = []

for i in range(130):

a = random.uniform(-1000, 1000)

b = random.uniform(-1000, 1000)

x.append(a)

y.append(b)

f = open('C:/Users/Administrator/Desktop/kmeans-python-master/kmeans-python-master/kmeans/point.txt', 'w') # 路径,可自行修改

for i, j in zip(x, y):

f.write((i, j).__str__()+'\n')

f.close()

plt.xlim(-1000, 1000)

plt.ylim(-1000, 1000)

plt.scatter(x, y)

plt.show()

if __name__ == '__main__':

randPoint()结果如图

利用上述这些点进行聚类,当然也可以自行提供数据

# -*- coding:utf-8 -*-

# 实现kmeans聚类算法

from numpy import *

import matplotlib.pyplot as plt

import copy

# 利用numpy的random生成随机的一个数组

# 然后描点

def describe_point():

a = random.randn(1000)

b = [i*1000 for i in a]

copy.deepcopy(b)

random.shuffle(b)

f = open('C:/Users/Administrator/Desktop/kmeans-python-master/kmeans-python-master/kmeans/point.txt', 'w')

for i, j in zip(a, b):

f.write((i, j).__str__()+'\n')

plt.plot(a, b, 'ro')

plt.show()

# 将文本中的点加载到一个矩阵中

def loadDateSet(fileName):

dataMat = []

f = open(fileName, 'r')

for line in f.readlines():

curLine = line.replace('(', '').replace(')', '')

curLine = curLine.strip().split(',')

resultSet = [float(i) for i in curLine]

dataMat.append(resultSet)

return dataMat

# 在样本中随机取k个点作为初始质心

def initCentroids(dataSet, k):

# 矩阵的行数、列数

num, dim = dataSet.shape

centroids = zeros((k, dim))

for i in range(k):

index = int(random.uniform(0, num))

centroids[i, :] = dataSet[index, :]

return centroids

# 欧拉距离

def euclDistance(vector1, vector2):

return sqrt(sum(power(vector2 - vector1, 2)))

# kmeans主要原理实现

def kmeans(dataSet, k, distant=euclDistance, createCent=initCentroids):

# 得到样本数

m = shape(dataSet)[0]

# 初始化一个m*2的矩阵, 用于存放点所属质心,和距离

cluster = mat(zeros((m, 2)))

# 初始化k个质心

centerPoint = createCent(dataSet, k)

# 用于判断是否确定所属的质心

isTrue = True

while isTrue:

isTrue = False

for i in range(m): # 遍历所有样本

min_dis = inf; min_index = -1 # 初始化最小值

for j in range(k):

# 求出每一个质心点到样本点的欧拉距离,最后将距离最小的放入到数组cluster中

disIJ = distant(dataSet[i, :], centerPoint[j, :])

if disIJ < min_dis:

min_dis = disIJ; min_index = j # 找出距离当前样本最近的那个质心

a = cluster[i, :0]

if cluster[i, 0] != min_index: #此处做了修改

# if cluster[i, :0] != min_dis:

isTrue = True # 更新当前样本点所属于的质心,如果当前样本点不属于当前与之距离最小的质心,则说明簇分配结果仍需要改变

cluster[i, :] = min_index, min_dis**2

print(centerPoint)

# 用于求出某个质心点下的所有样本点的平均值,赋值为新的质心点,此处做了修改

for cent in range(k):

pointCluster = dataSet[nonzero(cluster[:, 0].A==cent)[0]] # 去第一列等于cent的所有列

centerPoint[cent, :] = mean(pointCluster, axis=0)

return cluster, centerPoint

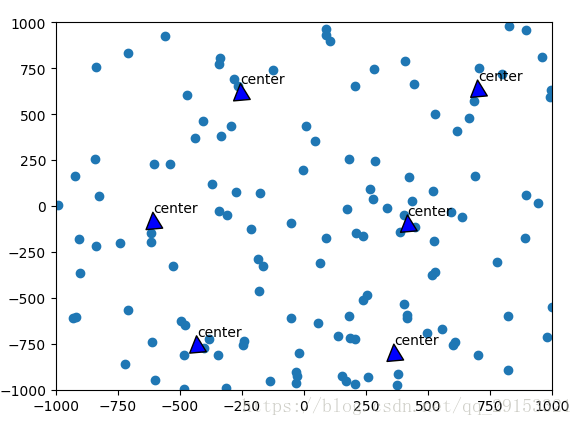

# 画图,将点显示在平面中

def draw(dataSet, center):

center_num = len(center)

fig = plt.figure

# 在图上描点

plt.xlim(-1000, 1000)

plt.ylim(-1000, 1000)

x = dataSet[:, 0].tolist()

y = dataSet[:, 1].tolist()

plt.scatter(x, y)

for i in range(center_num):

# 给箭头符号做注释

plt.annotate('center', xy=(center[i, 0], center[i, 1]), xytext= \

(center[i, 0] + 1, center[i, 1] + 1), arrowprops=dict(facecolor='blue'))

plt.show()

# 测试

if __name__ == '__main__':

dataMat = mat(loadDateSet('C:/Users/Administrator/Desktop/kmeans-python-master/kmeans-python-master/kmeans/point.txt'))

dataSet, center = kmeans(dataMat, 6)

draw(dataMat, center)

其中原作者的kmeans函数应该是有些问题,对此进行了修改,在重新计算重心方面,这篇文章定义的质心点是6个,为什么是6个并没有说明。

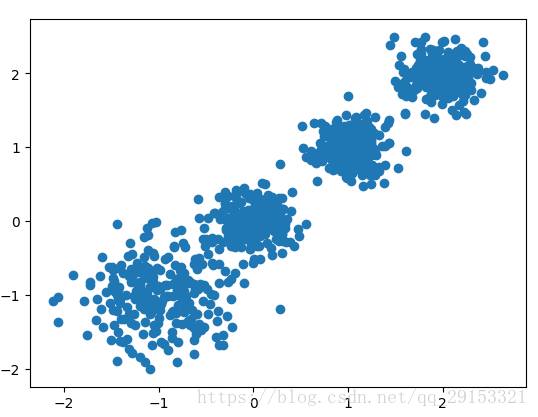

接下来继续探索第二种方法,为了确定质心的k值个数及运用sklearn,首先生成聚类的数据集

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets.samples_generator import make_blobs

# 生成样本,X为样本特征,Y为样本簇类别, 共1000个样本,每个样本4个特征,共4个簇,簇中心在[-1,-1], [0,0],[1,1], [2,2], 簇方差分别为[0.4, 0.2, 0.2]

# random_state为随机种子

X, y = make_blobs(n_samples=1000, n_features=2, centers=[[-1,-1], [0,0], [1,1], [2,2]], cluster_std=[0.4, 0.2, 0.2, 0.2],

random_state =9)

plt.scatter(X[:, 0], X[:, 1], marker='o')

plt.show()进行kmeans分类,kmeans类

from sklearn.cluster import KMeans

from sklearn import metrics

# y_pred = KMeans(n_clusters=3, random_state=9).fit_predict(X)

# plt.scatter(X[:, 0], X[:, 1], c=y_pred)

# plt.show()

#

# print(metrics.calinski_harabaz_score(X, y_pred)) # Calinski-Harabasz分数值

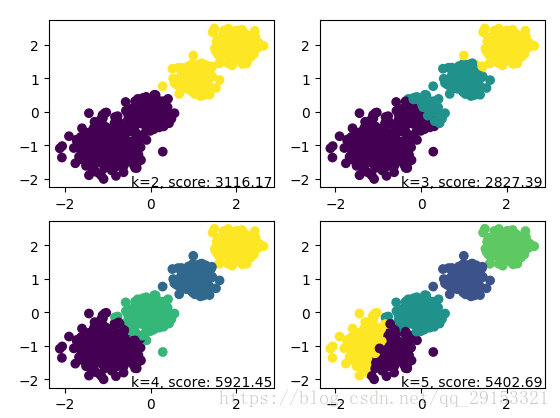

for index, k in enumerate((2,3,4,5)):

plt.subplot(2,2,index+1)

y_pred = KMeans(n_clusters=k, random_state=9).fit_predict(X)

score= metrics.calinski_harabaz_score(X, y_pred)

plt.scatter(X[:, 0], X[:, 1], c=y_pred)

plt.text(.99, .01, ('k=%d, score: %.2f' % (k,score)),

transform=plt.gca().transAxes, size=10,

horizontalalignment='right')

plt.show()或第二种,MiniBatchKMeans类,与上参数略有不同,可自行了解。

from sklearn.cluster import MiniBatchKMeans

for index, k in enumerate((2,3,4,5)):

plt.subplot(2,2,index+1)

y_pred = MiniBatchKMeans(n_clusters=k, batch_size = 200, random_state=9).fit_predict(X)

score= metrics.calinski_harabaz_score(X, y_pred)

plt.scatter(X[:, 0], X[:, 1], c=y_pred)

plt.text(.99, .01, ('k=%d, score: %.2f' % (k,score)),

transform=plt.gca().transAxes, size=10,

horizontalalignment='right')

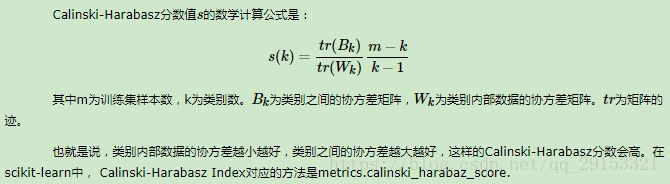

plt.show()该文中两种方法都是k=4时Calinski-Harabasz分数值最大,它可以作为k值选择标准,表示的是

至此完成了聚类算法的简单实现及研究。