一、on policy & off policy

所有的学习控制都面临着一个困境,他们希望学到的动作可以使随后的智能体行为是最优的,但为了搜索所有的动作(已找到最优动作),他们需要采取非最优的行动,如何在遵循探索策略采取行动的同时学到最优策略呢?

第一种方式是:on policy,这种策略其实是一种妥协——他并不是找到最优的策略,而是学习一个接近最优而且扔能进行试探的策略动作值。

另一种方式是:off policy,这种方式干脆使用两种策略,一个用来学习并最终称为最优策略,另一个则更加具有试探性,用来产生智能体的行动样本。用来学习的策略被称为 目标策略,用于生成行动样本的被称为 行动策略。

on policy 更加简单,更加容易收敛,但off policy则更加符合想象力,能处理一些on policy不能做的一些活动。

比如:机器人想要炸一个国家,但手上只有两颗炸弹,炸哪两个城市最好,只能通过off policy的方式,因为炸弹炸了就没了,机器人只能通过想象力去试探炸那里更好,on policy由于做决策的策略和生成行动样本的策略是一个,所以不具有这样的想象力。

二、蒙特卡洛on and off policy control

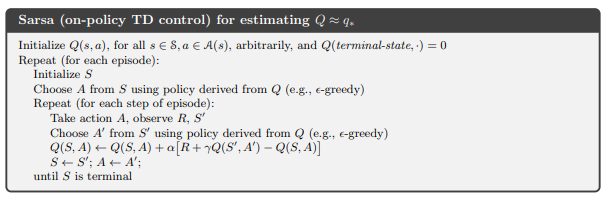

三、sarsa

sarsa是TD 算法中 on policy的一个案例

import numpy as np import pandas as pd import matplotlib.pyplot as plt from matplotlib.colors import hsv_to_rgb # ENV -- WORLD_HEIGHT = 4 WORLD_WIDTH =12 NUM_STATE = WORLD_WIDTH * WORLD_HEIGHT # ACTION -- UP = 0 DOWN = 1 LEFT = 2 RIGHT = 3 NUM_ACTIONS = 4 ACTION = [UP, DOWN, RIGHT, LEFT] ACTIONS = ['U', 'D', 'R', 'L'] # STATE -- START = (3,0) END = (3,11) def change_range(values, vmin=0, vmax=1): start_zero = values - np.min(values) return (start_zero / (np.max(start_zero) + 1e-7)) * (vmax - vmin) + vmin class ENVIRONMENT: terrain_color = dict(normal=[127/360, 0, 96/100], objective=[26/360, 100/100, 100/100], cliff=[247/360, 92/100, 70/100], player=[344/360, 93/100, 100/100]) def __init__(self): self.player = None self.num_steps = 0 self.CreateEnv() self.DrawEnv() def CreateEnv(self, inital_grid = None): # create cliff walking grid world # just such as the following grid ''' 0 11 0 x x x x x x x x x x x x 1 x x x x x x x x x x x x 2 x x x x x x x x x x x x 3 S o o o o o o o o o o E ''' self.grid = self.terrain_color['normal'] * np.ones((WORLD_HEIGHT, WORLD_WIDTH, 3)) self.grid[-1, 1:11] = self.terrain_color['cliff'] self.grid[-1,-1] = self.terrain_color['objective'] def DrawEnv(self): self.fig, self.ax = plt.subplots(figsize=(WORLD_WIDTH, WORLD_HEIGHT)) self.ax.grid(which='minor') self.q_texts = [self.ax.text( i%WORLD_WIDTH, i//WORLD_WIDTH, '0', fontsize=11, verticalalignment='center', horizontalalignment='center') for i in range(12 * 4)] self.im = self.ax.imshow(hsv_to_rgb(self.grid), cmap='terrain', interpolation='nearest', vmin=0, vmax=1) self.ax.set_xticks(np.arange(WORLD_WIDTH)) self.ax.set_xticks(np.arange(WORLD_WIDTH) - 0.5, minor=True) self.ax.set_yticks(np.arange(WORLD_HEIGHT)) self.ax.set_yticks(np.arange(WORLD_HEIGHT) - 0.5, minor=True) # plt.show() def step(self, action): # Possible actions if action == 0 and self.player[0] > 0: self.player = (self.player[0] - 1, self.player[1]) if action == 1 and self.player[0] < 3: self.player = (self.player[0] + 1, self.player[1]) if action == 2 and self.player[1] < 11: self.player = (self.player[0], self.player[1] + 1) if action == 3 and self.player[1] > 0: self.player = (self.player[0], self.player[1] - 1) self.num_steps = self.num_steps + 1 # Rewards, game on # common situation: reward = -1 & game can carry on reward = -1 done = False # if walk to the cliff, game over and loss, reward = -100 # if walk to the destination, game over and win, reward = 0 if self.player[0] == WORLD_HEIGHT-1 and self.player[1] > 0 and self.player[1] < WORLD_WIDTH-1: reward = -100 done = True elif self.player[0] == END[0] and self.player[1] == END[1]: reward = 0 done = True return self.player, reward, done def reset(self): self.player = [START[0], START[1]] self.num_steps = 0 return self.player def RenderEnv(self, q_values, action=None, max_q=False, colorize_q=False): assert self.player is not None, 'You first need to call .reset()' if colorize_q: grid = self.terrain_color['normal'] * np.ones((4, 12, 3)) values = change_range(np.max(q_values, -1)).reshape(4, 12) grid[:, :, 1] = values grid[-1, 1:11] = self.terrain_color['cliff'] grid[-1,-1] = self.terrain_color['objective'] else: grid = self.grid.copy() # render the player grid grid[self.player] = self.terrain_color['player'] self.im.set_data(hsv_to_rgb(grid)) if q_values is not None: xs = np.repeat(np.arange(12), 4) ys = np.tile(np.arange(4), 12) for i, text in enumerate(self.q_texts): txt = "" for aaction in range(len(ACTIONS)): txt += str(ACTIONS[aaction]) + ":" + str( round(q_values[ i//WORLD_WIDTH, i%WORLD_WIDTH, aaction], 2) ) + '\n' text.set_text(txt) # show the action if action is not None: self.ax.set_title(action, color='r', weight='bold', fontsize=32) plt.pause(0.1) def egreedy_policy( q_values, state, epsilon=0.1): if np.random.binomial(1, epsilon) == 1: return np.random.choice(ACTION) else: values_ = q_values[state[0], state[1], :] return np.random.choice([action_ for action_, value_ in enumerate(values_) if value_ == np.max(values_)]) def sarsa(env, episodes=500, render=True, epsilon=0.1, learning_rate=0.5, gamma=0.9): q_values_sarsa = np.zeros((WORLD_HEIGHT, WORLD_WIDTH, NUM_ACTIONS)) ep_rewards = [] # sarsa begin... for _ in range(0,episodes): state = env.reset() done = False reward_sum = 0 action = egreedy_policy(q_values_sarsa, state, epsilon) while done == False: next_state, reward, done = env.step(action) next_action = egreedy_policy(q_values_sarsa, next_state, epsilon) # 普通sarsa q_values_sarsa[state[0], state[1], action] += learning_rate * (reward + gamma * q_values_sarsa[next_state[0], next_state[1], next_action] - q_values_sarsa[state[0], state[1], action]) # 期望 sarsa # q_values_sarsa[state[0], state[1], action] += learning_rate * (reward + gamma * q_values_sarsa[next_state[0], next_state[1], next_action] - q_values_sarsa[state[0], state[1], action]) state = next_state action = next_action # for comparsion, record all the rewards, this is not necessary for QLearning algorithm reward_sum += reward if render: env.RenderEnv(q_values_sarsa, action=ACTIONS[action], colorize_q=True) ep_rewards.append(reward_sum) # sarsa end... return ep_rewards, q_values_sarsa def play(q_values): # simulate the environent using the learned Q values env = ENVIRONMENT() state = env.reset() done = False while not done: # Select action action = egreedy_policy(q_values, state, 0.0) # Do the action state_, R, done = env.step(action) # Update state and action state = state_ env.RenderEnv(q_values=q_values, action=ACTIONS[action], colorize_q=True) env = ENVIRONMENT() sarsa_rewards, q_values_sarsa = sarsa(env, episodes=500, render=False, epsilon=0.1, learning_rate=1, gamma=0.9) play(q_values_sarsa)四、Q-learning

Q-learning是TD 算法中 on policy的一个案例

# 总悬崖案例 Q-learning代码 # import numpy as np import pandas as pd import matplotlib.pyplot as plt from matplotlib.colors import hsv_to_rgb # ENV -- WORLD_HEIGHT = 4 WORLD_WIDTH =12 NUM_STATE = WORLD_WIDTH * WORLD_HEIGHT # ACTION -- UP = 0 DOWN = 1 LEFT = 2 RIGHT = 3 NUM_ACTIONS = 4 ACTION = [UP, DOWN, RIGHT, LEFT] ACTIONS = ['U', 'D', 'R', 'L'] # STATE -- START = (3,0) END = (3,11) def change_range(values, vmin=0, vmax=1): start_zero = values - np.min(values) return (start_zero / (np.max(start_zero) + 1e-7)) * (vmax - vmin) + vmin class ENVIRONMENT: terrain_color = dict(normal=[127/360, 0, 96/100], objective=[26/360, 100/100, 100/100], cliff=[247/360, 92/100, 70/100], player=[344/360, 93/100, 100/100]) def __init__(self): self.player = None self.num_steps = 0 self.CreateEnv() self.DrawEnv() def CreateEnv(self, inital_grid = None): # create cliff walking grid world # just such as the following grid ''' 0 11 0 x x x x x x x x x x x x 1 x x x x x x x x x x x x 2 x x x x x x x x x x x x 3 S o o o o o o o o o o E ''' self.grid = self.terrain_color['normal'] * np.ones((WORLD_HEIGHT, WORLD_WIDTH, 3)) self.grid[-1, 1:11] = self.terrain_color['cliff'] self.grid[-1,-1] = self.terrain_color['objective'] def DrawEnv(self): self.fig, self.ax = plt.subplots(figsize=(WORLD_WIDTH, WORLD_HEIGHT)) self.ax.grid(which='minor') self.q_texts = [self.ax.text( i%WORLD_WIDTH, i//WORLD_WIDTH, '0', fontsize=11, verticalalignment='center', horizontalalignment='center') for i in range(12 * 4)] self.im = self.ax.imshow(hsv_to_rgb(self.grid), cmap='terrain', interpolation='nearest', vmin=0, vmax=1) self.ax.set_xticks(np.arange(WORLD_WIDTH)) self.ax.set_xticks(np.arange(WORLD_WIDTH) - 0.5, minor=True) self.ax.set_yticks(np.arange(WORLD_HEIGHT)) self.ax.set_yticks(np.arange(WORLD_HEIGHT) - 0.5, minor=True) # plt.show() def step(self, action): # Possible actions if action == 0 and self.player[0] > 0: self.player = (self.player[0] - 1, self.player[1]) if action == 1 and self.player[0] < 3: self.player = (self.player[0] + 1, self.player[1]) if action == 2 and self.player[1] < 11: self.player = (self.player[0], self.player[1] + 1) if action == 3 and self.player[1] > 0: self.player = (self.player[0], self.player[1] - 1) self.num_steps = self.num_steps + 1 # Rewards, game on # common situation: reward = -1 & game can carry on reward = -1 done = False # if walk to the cliff, game over and loss, reward = -100 # if walk to the destination, game over and win, reward = 0 if self.player[0] == WORLD_HEIGHT-1 and self.player[1] > 0 and self.player[1] < WORLD_WIDTH-1: reward = -100 done = True elif self.player[0] == END[0] and self.player[1] == END[1]: reward = 0 done = True return self.player, reward, done def reset(self): self.player = [START[0], START[1]] self.num_steps = 0 return self.player def RenderEnv(self, q_values, action=None, max_q=False, colorize_q=False): assert self.player is not None, 'You first need to call .reset()' if colorize_q: grid = self.terrain_color['normal'] * np.ones((4, 12, 3)) values = change_range(np.max(q_values, -1)).reshape(4, 12) grid[:, :, 1] = values grid[-1, 1:11] = self.terrain_color['cliff'] grid[-1,-1] = self.terrain_color['objective'] else: grid = self.grid.copy() # render the player grid grid[self.player] = self.terrain_color['player'] self.im.set_data(hsv_to_rgb(grid)) if q_values is not None: xs = np.repeat(np.arange(12), 4) ys = np.tile(np.arange(4), 12) for i, text in enumerate(self.q_texts): txt = "" for aaction in range(len(ACTIONS)): txt += str(ACTIONS[aaction]) + ":" + str( round(q_values[ i//WORLD_WIDTH, i%WORLD_WIDTH, aaction], 2) ) + '\n' text.set_text(txt) # show the action print(action) if action is not None: self.ax.set_title(action, color='r', weight='bold', fontsize=32) plt.pause(0.1) def egreedy_policy( q_values, state, epsilon=0.1): if np.random.binomial(1, epsilon) == 1: return np.random.choice(ACTION) else: values_ = q_values[state[0], state[1], :] return np.random.choice([action_ for action_, value_ in enumerate(values_) if value_ == np.max(values_)]) def Qlearning(env, episodes=500, render=True, epsilon=0.1, learning_rate=0.5, gamma=0.9): q_values = np.zeros((WORLD_HEIGHT, WORLD_WIDTH, NUM_ACTIONS)) ep_rewards = [] # Qlearning begin... for _ in range(0,episodes): state = env.reset() done = False reward_sum = 0 while done == False: action = egreedy_policy(q_values, state, epsilon) next_state, reward, done = env.step(action) q_values[state[0], state[1], action] += learning_rate * (reward + gamma * np.max(q_values[next_state[0], next_state[1], :]) - q_values[state[0], state[1], action]) state = next_state # for comparsion, record all the rewards, this is not necessary for QLearning algorithm reward_sum += reward if render: env.RenderEnv(q_values, action=ACTIONS[action], colorize_q=True) ep_rewards.append(reward_sum) # Qlearning end... return ep_rewards, q_values def play(q_values): # simulate the environent using the learned Q values env = ENVIRONMENT() state = env.reset() done = False while not done: # Select action action = egreedy_policy(q_values, state, 0.0) # Do the action state_, R, done = env.step(action) # Update state and action state = state_ env.RenderEnv(q_values=q_values, action=ACTIONS[action], colorize_q=True) env = ENVIRONMENT() q_learning_rewards, q_values = Qlearning(env, episodes=500, render=False, epsilon=0.1, learning_rate=1, gamma=0.9) play(q_values)

强化学习 Sarsa & Q-learning:on & off policy策略下的时序差分控制

猜你喜欢

转载自blog.csdn.net/qq_36336522/article/details/107871244

今日推荐

周排行